
Multiple VPC Networks

GSP211

Overview

Virtual Private Cloud (VPC) networks allow you to maintain isolated environments within a larger cloud structure, giving you granular control over data protection, network access, and application security.

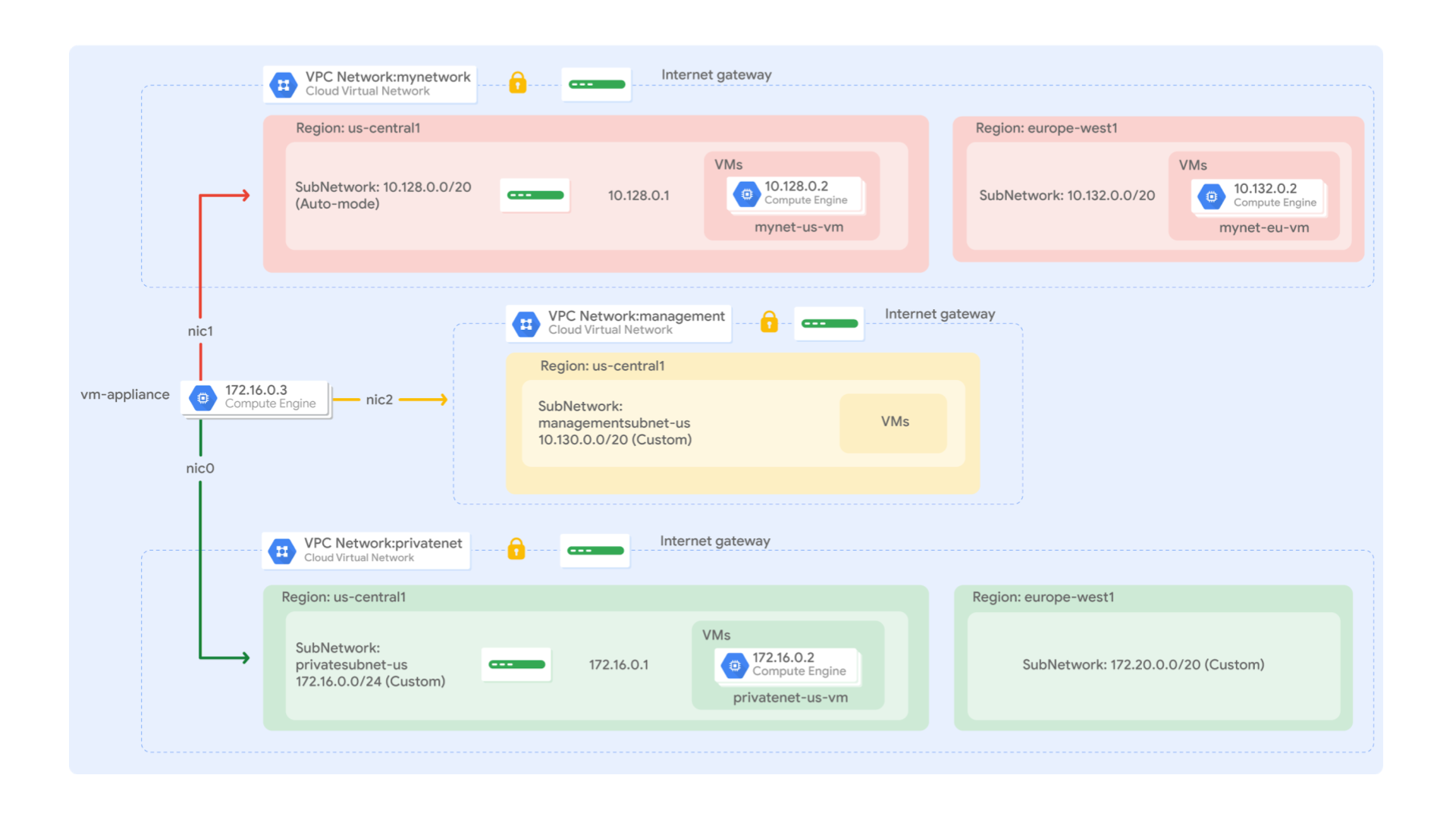

In this lab you create several VPC networks and VM instances, then test connectivity across networks. Specifically, you create two custom mode networks (managementnet and privatenet) with firewall rules and VM instances as shown in this network diagram:

The mynetwork network, its firewall rules, and two VM instances (mynet-us-vm and mynet-eu-vm) have already been created for you.

Objectives

In this lab, you will learn how to perform the following tasks:

- Create custom mode VPC networks with firewall rules

- Create VM instances using Compute Engine

- Explore the connectivity for VM instances across VPC networks

- Create a VM instance with multiple network interfaces

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab. Remember, once you start, you cannot pause a lab.

How to start your lab and sign in to the Google Cloud console

- Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is the Lab Details panel with the following:

  - The Open Google Cloud console button

  - Time remaining

  - The temporary credentials that you must use for this lab

  - Other information, if needed, to step through this lab

- Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

  The lab spins up resources, and then opens another tab that shows the Sign in page.

  Tip: Arrange the tabs in separate windows, side by side.

  Note: If you see the Choose an account dialog, click Use Another Account.

- If necessary, copy the Username below and paste it into the Sign in dialog.

  {{{user_0.username | "Username"}}}

  You can also find the Username in the Lab Details panel.

- Click Next.

- Copy the Password below and paste it into the Welcome dialog.

  {{{user_0.password | "Password"}}}

  You can also find the Password in the Lab Details panel.

- Click Next.

  Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

  Note: Using your own Google Cloud account for this lab may incur extra charges.

- Click through the subsequent pages:

  - Accept the terms and conditions.

  - Do not add recovery options or two-factor authentication (because this is a temporary account).

  - Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Activate Cloud Shell

Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5 GB home directory and runs on Google Cloud. Cloud Shell provides command-line access to your Google Cloud resources.

- Click Activate Cloud Shell at the top of the Google Cloud console.

When you are connected, you are already authenticated, and the project is set to your Project_ID.

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

- (Optional) You can list the active account name with the gcloud auth list command:

- Click Authorize.

Output:

- (Optional) You can list the project ID with the gcloud config list project command:

Output:

Note: For full documentation of gcloud in Google Cloud, refer to the gcloud CLI overview guide.

Task 1. Create custom mode VPC networks with firewall rules

Create two custom mode networks, managementnet and privatenet, along with firewall rules to allow SSH, ICMP, and RDP ingress traffic.

Create the managementnet network

Create the managementnet network using the Cloud console.

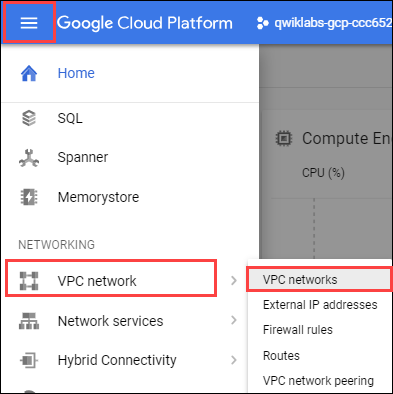

- In the Cloud console, navigate to Navigation menu > VPC network > VPC networks.

- Notice the default and mynetwork networks with their subnets.

  Each Google Cloud project starts with the default network. In addition, the mynetwork network has been premade as part of your network diagram.

- Click Create VPC Network.

- Set the Name to managementnet.

- For Subnet creation mode, click Custom.

- Set the following values and leave all other values at their defaults:

  | Property | Value (type value or select option as specified) |
  | --- | --- |
  | Name | managementsubnet-us |
  | Region | |
  | IPv4 range | 10.130.0.0/20 |

- Click Done.

- Click EQUIVALENT COMMAND LINE.

  These commands illustrate that networks and subnets can also be created using the Cloud Shell command line. You will create the privatenet network using these commands with similar parameters.

- Click Close.

- Click Create.
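The console steps above can also be expressed as gcloud commands. The minimal Python sketch below only assembles those invocations as argument lists and sends nothing to Google Cloud. REGION_US is a hypothetical placeholder for the region your lab assigns. usable_addresses is an illustrative helper that assumes Google Cloud reserves four addresses in every primary subnet range, which leaves 4,092 of the 4,096 addresses in a /20 for VMs.

```python
from __future__ import annotations

import ipaddress
import shlex

# Hypothetical placeholder: substitute the US region assigned to your lab.
REGION_US = "REGION_US"


def managementnet_commands(region: str) -> list[list[str]]:
    """Build gcloud argument lists mirroring the managementnet console steps."""
    return [
        # Custom subnet mode: no subnets are created automatically.
        ["gcloud", "compute", "networks", "create", "managementnet",
         "--subnet-mode=custom"],
        # One regional subnet with the 10.130.0.0/20 primary range.
        ["gcloud", "compute", "networks", "subnets", "create",
         "managementsubnet-us", "--network=managementnet",
         f"--region={region}", "--range=10.130.0.0/20"],
    ]


def usable_addresses(cidr: str) -> int:
    """Addresses left for VMs after removing the four reserved primary-range addresses."""
    return ipaddress.ip_network(cidr).num_addresses - 4


if __name__ == "__main__":
    for cmd in managementnet_commands(REGION_US):
        # In Cloud Shell you could run each one with subprocess.run(cmd, check=True).
        print(shlex.join(cmd))
    print(usable_addresses("10.130.0.0/20"))  # 4096 - 4 = 4092
```

Printing with shlex.join gives command lines you could paste into Cloud Shell once the placeholder is replaced.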

Test Completed Task

Click Check my progress to verify that you completed the task. If you successfully created the managementnet network, you will see an assessment score.

Create the privatenet network

Create the privatenet network using the Cloud Shell command line.

- Run the following command to create the privatenet network:

- Run the following command to create the privatesubnet-us subnet:

- Run the following command to create the privatesubnet-eu subnet:
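Here is a hedged sketch of the three commands above. The Python builds the gcloud argument lists for privatenet and its two subnets. REGION_US, REGION_EU, and EU_RANGE are hypothetical placeholders for values your lab supplies. The privatesubnet-us range, 172.16.0.0/24, is the one this lab lists for vm-appliance's nic0 later on. subnet_cmd is an illustrative helper and is not part of gcloud.

```python
from __future__ import annotations

import shlex

# Hypothetical placeholders: substitute the regions assigned to your lab and
# the privatesubnet-eu range given in the lab instructions.
REGION_US = "REGION_US"
REGION_EU = "REGION_EU"
EU_RANGE = "EU_RANGE"


def subnet_cmd(name: str, network: str, region: str, cidr: str) -> list[str]:
    """Build a gcloud command that adds one subnet to a custom mode network."""
    return ["gcloud", "compute", "networks", "subnets", "create", name,
            f"--network={network}", f"--region={region}", f"--range={cidr}"]


def privatenet_commands() -> list[list[str]]:
    """The network first, then one subnet per region."""
    return [
        ["gcloud", "compute", "networks", "create", "privatenet",
         "--subnet-mode=custom"],
        subnet_cmd("privatesubnet-us", "privatenet", REGION_US, "172.16.0.0/24"),
        subnet_cmd("privatesubnet-eu", "privatenet", REGION_EU, EU_RANGE),
    ]


if __name__ == "__main__":
    for cmd in privatenet_commands():
        # In Cloud Shell you could run each one with subprocess.run(cmd, check=True).
        print(shlex.join(cmd))
```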

Test Completed Task

Click Check my progress to verify that you completed the task. If you successfully created the privatenet network, you will see an assessment score.

- Run the following command to list the available VPC networks:

The output should look like this:

- Run the following command to list the available VPC subnets (sorted by VPC network):

The output should look like this:

- In the Cloud console, navigate to Navigation menu > VPC network > VPC networks.

- Verify that the same networks and subnets are listed in the Cloud console.

Create the firewall rules for managementnet

Create firewall rules to allow SSH, ICMP, and RDP ingress traffic to VM instances on the managementnet network.

- In the Cloud console, navigate to Navigation menu > VPC network > Firewall.

- Click + Create Firewall Rule.

- Set the following values and leave all other values at their defaults:

  | Property | Value (type value or select option as specified) |
  | --- | --- |
  | Name | managementnet-allow-icmp-ssh-rdp |
  | Network | managementnet |
  | Targets | All instances in the network |
  | Source filter | IPv4 Ranges |
  | Source IPv4 ranges | 0.0.0.0/0 |
  | Protocols and ports | Specified protocols and ports, and then check tcp, type: 22, 3389; and check Other protocols, type: icmp. |

- Click EQUIVALENT COMMAND LINE.

  These commands illustrate that firewall rules can also be created using the Cloud Shell command line. You will create the privatenet firewall rules using these commands with similar parameters.

- Click Close.

- Click Create.
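To make the rule's fields concrete, here is a simplified Python model of how managementnet-allow-icmp-ssh-rdp evaluates incoming traffic. A packet must come from a listed source range and must match one of the allowed protocol and port entries. The IngressAllowRule class is hypothetical and ignores priorities, targets, and deny rules. Traffic this rule does not match falls to other rules or, if none match, to the network's implied deny-ingress rule.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressAllowRule:
    """Hypothetical, simplified model of an ingress allow rule."""
    source_ranges: tuple[str, ...]
    tcp_ports: frozenset[int]
    allow_icmp: bool

    def permits(self, protocol: str, source_ip: str, port: int | None = None) -> bool:
        src = ipaddress.ip_address(source_ip)
        # The source must fall inside at least one configured source range.
        if not any(src in ipaddress.ip_network(r) for r in self.source_ranges):
            return False
        if protocol == "icmp":
            return self.allow_icmp  # ICMP has no ports
        if protocol == "tcp":
            return port in self.tcp_ports
        return False  # not matched by this rule


# Values from the settings above: tcp 22 (SSH), tcp 3389 (RDP), and icmp from anywhere.
managementnet_allow_icmp_ssh_rdp = IngressAllowRule(
    source_ranges=("0.0.0.0/0",),
    tcp_ports=frozenset({22, 3389}),
    allow_icmp=True,
)

if __name__ == "__main__":
    rule = managementnet_allow_icmp_ssh_rdp
    print(rule.permits("tcp", "203.0.113.10", 22))   # SSH is listed
    print(rule.permits("tcp", "203.0.113.10", 443))  # HTTPS is not listed
```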

Test Completed Task

Click Check my progress to verify that you completed the task. If you successfully created the firewall rules for the managementnet network, you will see an assessment score.

Create the firewall rules for privatenet

Create the firewall rules for the privatenet network using the Cloud Shell command line.

- In Cloud Shell, run the following command to create the privatenet-allow-icmp-ssh-rdp firewall rule:

The output should look like this:

Test Completed Task

Click Check my progress to verify that you completed the task. If you successfully created the firewall rules for the privatenet network, you will see an assessment score.
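The privatenet rule uses the same settings as the managementnet rule. The sketch below assembles the matching gcloud compute firewall-rules create invocation for either network, along with the sorted listing command. allow_icmp_ssh_rdp_cmd is a hypothetical helper. --priority=1000 is simply the default priority written out explicitly. The comma-separated --rules value is what packs ICMP, SSH, and RDP into a single rule.

```python
from __future__ import annotations

import shlex


def allow_icmp_ssh_rdp_cmd(network: str) -> list[str]:
    """Build the gcloud command for an ingress rule named <network>-allow-icmp-ssh-rdp."""
    return [
        "gcloud", "compute", "firewall-rules", "create",
        f"{network}-allow-icmp-ssh-rdp",
        "--direction=INGRESS",
        "--priority=1000",  # the default priority, spelled out
        f"--network={network}",
        "--action=ALLOW",
        # One rule can carry several protocol and port entries.
        "--rules=icmp,tcp:22,tcp:3389",
        "--source-ranges=0.0.0.0/0",
    ]


LIST_RULES = ["gcloud", "compute", "firewall-rules", "list", "--sort-by=NETWORK"]

if __name__ == "__main__":
    print(shlex.join(allow_icmp_ssh_rdp_cmd("privatenet")))
    print(shlex.join(LIST_RULES))
```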

- Run the following command to list all the firewall rules (sorted by VPC network):

The output should look like this:

The firewall rules for the mynetwork network have been created for you. You can define multiple protocols and ports in one firewall rule (privatenet and managementnet) or spread them across multiple rules (default and mynetwork).

- In the Cloud console, navigate to Navigation menu > VPC network > Firewall.

- Verify that the same firewall rules are listed in the Cloud console.
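A toy model can show why combining protocols in one rule or spreading them over several gives the same result. Ingress allow rules are additive, so traffic is admitted if any one rule allows it. In this hypothetical Python sketch, each rule is just a set of (protocol, port) pairs. It ignores source ranges, targets, and priorities.

```python
from __future__ import annotations

# Toy model: a rule is the set of (protocol, port) pairs it allows.
# ICMP has no ports, so it is keyed with port None.
combined = [frozenset({("icmp", None), ("tcp", 22), ("tcp", 3389)})]
split = [
    frozenset({("icmp", None)}),
    frozenset({("tcp", 22)}),
    frozenset({("tcp", 3389)}),
]


def allowed(rules: list[frozenset], protocol: str, port: int | None = None) -> bool:
    """Allow rules are additive: one matching rule is enough."""
    key = (protocol, None if protocol == "icmp" else port)
    return any(key in rule for rule in rules)


probes = [("icmp", None), ("tcp", 22), ("tcp", 3389), ("tcp", 80), ("udp", 22)]
# Both layouts admit exactly the same traffic.
assert all(allowed(combined, p, n) == allowed(split, p, n) for p, n in probes)
print([p for p in probes if allowed(split, *p)])
```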

Task 2. Create VM instances

Create two VM instances:

- managementnet-us-vm in managementsubnet-us

- privatenet-us-vm in privatesubnet-us

Create the managementnet-us-vm instance

Create the managementnet-us-vm instance using the Cloud console.

- In the Cloud console, navigate to Navigation menu > Compute Engine > VM instances.

  The mynet-eu-vm and mynet-us-vm instances have already been created for you as part of your network diagram.

- Click Create instance.

- Set the following values and leave all other values at their defaults:

  | Property | Value (type value or select option as specified) |
  | --- | --- |
  | Name | managementnet-us-vm |
  | Region | |
  | Zone | |
  | Series | E2 |
  | Machine type | e2-micro |

- From Advanced options, click the Networking, Disks, Security, Management, Sole-tenancy dropdown.

- Click Networking.

- For Network interfaces, click the dropdown to edit.

- Set the following values and leave all other values at their defaults:

  | Property | Value (type value or select option as specified) |
  | --- | --- |
  | Network | managementnet |
  | Subnetwork | managementsubnet-us |

- Click Done.

- Click EQUIVALENT CODE.

  This illustrates that VM instances can also be created using the Cloud Shell command line. You will create the privatenet-us-vm instance using these commands with similar parameters.

- Click Create.

Test Completed Task

Click Check my progress to verify that you completed the task. If you successfully created the VM instance in the managementnet network, you will see an assessment score.

Create the privatenet-us-vm instance

Create the privatenet-us-vm instance using the Cloud Shell command line.

- In Cloud Shell, run the following command to create the privatenet-us-vm instance:

The output should look like this:

Test Completed Task

Click Check my progress to verify that you completed the task. If you successfully created the VM instance in the privatenet network, you will see an assessment score.
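Here is a hedged sketch of the Cloud Shell commands for this step. The Python builds the gcloud compute instances create invocation for privatenet-us-vm and the zone-sorted listing command. ZONE_US is a hypothetical placeholder for the zone your lab assigns, and create_vm_cmd is an illustrative helper. The machine type matches the e2-micro used for managementnet-us-vm.

```python
from __future__ import annotations

import shlex

# Hypothetical placeholder: substitute the US zone assigned to your lab.
ZONE_US = "ZONE_US"


def create_vm_cmd(name: str, zone: str, subnet: str,
                  machine_type: str = "e2-micro") -> list[str]:
    """Build a gcloud command for a VM with one network interface (nic0)."""
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        # Places nic0 in the given subnet of a custom mode network.
        f"--subnet={subnet}",
    ]


LIST_VMS = ["gcloud", "compute", "instances", "list", "--sort-by=ZONE"]

if __name__ == "__main__":
    print(shlex.join(create_vm_cmd("privatenet-us-vm", ZONE_US, "privatesubnet-us")))
    print(shlex.join(LIST_VMS))
```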

- Run the following command to list all the VM instances (sorted by zone):

The output should look like this:

- In the Cloud console, navigate to Navigation menu > Compute Engine > VM instances.

- Verify that the VM instances are listed in the Cloud console.

- Click Column display options, select Network, and then click OK.

There are three instances in one zone (mynet-us-vm, managementnet-us-vm, and privatenet-us-vm) and one instance (mynet-eu-vm) in another. However, these instances are spread across three VPC networks (managementnet, mynetwork, and privatenet), with no instance in the same zone and network as another. In the next section, you explore the effect this has on internal connectivity.
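The observation about zones and networks can be restated as a quick tally. In this Python sketch, ZONE_US and ZONE_EU are hypothetical labels for the two zones shown in your console. The mapping of instances to networks comes from this lab.

```python
from collections import Counter

# Hypothetical zone labels stand in for the two zones shown in your console.
INSTANCES = {
    "mynet-us-vm": ("ZONE_US", "mynetwork"),
    "mynet-eu-vm": ("ZONE_EU", "mynetwork"),
    "managementnet-us-vm": ("ZONE_US", "managementnet"),
    "privatenet-us-vm": ("ZONE_US", "privatenet"),
}

per_zone = Counter(zone for zone, _ in INSTANCES.values())
per_zone_and_network = Counter(INSTANCES.values())
networks = {network for _, network in INSTANCES.values()}

print(per_zone["ZONE_US"], per_zone["ZONE_EU"])  # 3 1
print(sorted(networks))
# No (zone, network) pair is shared by two instances.
print(max(per_zone_and_network.values()) == 1)  # True
```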

Task 3. Explore the connectivity between VM instances

Explore the connectivity between the VM instances. Specifically, determine the effect of having VM instances in the same zone versus having instances in the same VPC network.

Ping the external IP addresses

Ping the external IP addresses of the VM instances to determine if you can reach the instances from the public internet.

- In the Cloud console, navigate to Navigation menu > Compute Engine > VM instances.

- Note the external IP addresses for mynet-eu-vm, managementnet-us-vm, and privatenet-us-vm.

- For mynet-us-vm, click SSH to launch a terminal and connect.

- To test connectivity to mynet-eu-vm's external IP, run the following command, substituting mynet-eu-vm's external IP:

  This should work!

- To test connectivity to managementnet-us-vm's external IP, run the following command, substituting managementnet-us-vm's external IP:

  This should work!

- To test connectivity to privatenet-us-vm's external IP, run the following command, substituting privatenet-us-vm's external IP:

  This should work!

Ping the internal IP addresses

Ping the internal IP addresses of the VM instances to determine if you can reach the instances from within a VPC network.

- In the Cloud console, navigate to Navigation menu > Compute Engine > VM instances.

- Note the internal IP addresses for mynet-eu-vm, managementnet-us-vm, and privatenet-us-vm.

- Return to the SSH terminal for mynet-us-vm.

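- To test connectivity to mynet-eu-vm's internal IP, run the following command, substituting mynet-eu-vm's internal IP:

  This should work, because mynet-eu-vm is on the same VPC network as mynet-us-vm, even though the two instances are in different zones and regions.

- To test connectivity to managementnet-us-vm's internal IP, run the following command, substituting managementnet-us-vm's internal IP:

  This should not work (ping reports 100% packet loss), because managementnet-us-vm is on a different VPC network.

- To test connectivity to privatenet-us-vm's internal IP, run the following command, substituting privatenet-us-vm's internal IP:

  This should not work either, because privatenet-us-vm is also on a different VPC network.

VPC networks are isolated private networking domains by default: internal IP address communication between networks is not allowed unless you set up mechanisms such as VPC Network Peering or VPN. Sharing a VPC network, not a zone, is what makes internal connectivity possible.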
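The ping results in this task follow a simple rule, sketched below as a toy Python model. External pings succeed because each network admits ICMP from any source, as the external tests show. Internal pings succeed only when both instances share a VPC network, regardless of zone or region. The model assumes no VPC Network Peering or VPN, and ping_succeeds is a hypothetical helper.

```python
from __future__ import annotations

# Instance -> VPC network, from this lab.
NETWORK_OF = {
    "mynet-us-vm": "mynetwork",
    "mynet-eu-vm": "mynetwork",
    "managementnet-us-vm": "managementnet",
    "privatenet-us-vm": "privatenet",
}


def ping_succeeds(src: str, dst: str, address: str) -> bool:
    """Toy model, assuming ICMP is allowed everywhere and no peering or VPN exists."""
    if address == "external":
        # Reached over the internet, so VPC membership does not matter.
        return True
    if address == "internal":
        # Internal IPs are routable only within the same VPC network,
        # whatever the zone or region.
        return NETWORK_OF[src] == NETWORK_OF[dst]
    raise ValueError(f"unknown address type: {address!r}")


if __name__ == "__main__":
    for dst in ("mynet-eu-vm", "managementnet-us-vm", "privatenet-us-vm"):
        print(dst,
              "external:", ping_succeeds("mynet-us-vm", dst, "external"),
              "internal:", ping_succeeds("mynet-us-vm", dst, "internal"))
```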

Task 4. Create a VM instance with multiple network interfaces

Every instance in a VPC network has a default network interface. You can create additional network interfaces attached to your VMs. Multiple network interfaces enable you to create configurations in which an instance connects directly to several VPC networks (up to 8 interfaces, depending on the instance's type).

Create the VM instance with multiple network interfaces

Create the vm-appliance instance with network interfaces in privatesubnet-us, managementsubnet-us and mynetwork. The CIDR ranges of these subnets do not overlap, which is a requirement for creating a VM with multiple network interface controllers (NICs).
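Python's ipaddress module makes the non-overlap requirement easy to check. This sketch takes the three primary ranges that this lab lists for vm-appliance's interfaces and reports any pair of ranges that overlap. The mynetwork range is the 10.128.0.0/20 subnet the lab names for that interface. overlapping_pairs is an illustrative helper, and the extra 10.130.8.0/24 subnet is hypothetical.

```python
import ipaddress
from itertools import combinations

# Primary ranges of the subnets vm-appliance attaches to, as listed in this lab.
NIC_SUBNETS = {
    "privatesubnet-us": ipaddress.ip_network("172.16.0.0/24"),
    "managementsubnet-us": ipaddress.ip_network("10.130.0.0/20"),
    "mynetwork": ipaddress.ip_network("10.128.0.0/20"),
}


def overlapping_pairs(subnets):
    """Return every pair of subnet names whose CIDR ranges overlap."""
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(subnets.items(), 2)
        if net_a.overlaps(net_b)
    ]


print(overlapping_pairs(NIC_SUBNETS))  # []

# A hypothetical extra subnet inside 10.130.0.0/20 would clash with managementsubnet-us.
clash = dict(NIC_SUBNETS, extra=ipaddress.ip_network("10.130.8.0/24"))
print(overlapping_pairs(clash))  # [('managementsubnet-us', 'extra')]
```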

- In the Cloud console, navigate to Navigation menu > Compute Engine > VM instances.

- Click Create instance.

- Set the following values and leave all other values at their defaults:

  | Property | Value (type value or select option as specified) |
  | --- | --- |
  | Name | vm-appliance |
  | Region | |
  | Zone | |
  | Series | E2 |
  | Machine type | e2-standard-4 |

- From Advanced options, click the Networking, Disks, Security, Management, Sole-tenancy dropdown.

- Click Networking.

- For Network interfaces, click the dropdown to edit.

- Set the following values and leave all other values at their defaults:

  | Property | Value (type value or select option as specified) |
  | --- | --- |
  | Network | privatenet |
  | Subnetwork | privatesubnet-us |

- Click Done.

- Click Add a network interface.

- Set the following values and leave all other values at their defaults:

  | Property | Value (type value or select option as specified) |
  | --- | --- |
  | Network | managementnet |
  | Subnetwork | managementsubnet-us |

- Click Done.

- Click Add a network interface.

- Set the following values and leave all other values at their defaults:

  | Property | Value (type value or select option as specified) |
  | --- | --- |
  | Network | mynetwork |
  | Subnetwork | mynetwork |

- Click Done.

- Click Create.
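For reference, a roughly equivalent gcloud invocation can be assembled as shown below. This is a sketch, not the console's exact EQUIVALENT CODE output. Each --network-interface flag adds one NIC in order, so privatesubnet-us becomes nic0. ZONE_US is a hypothetical placeholder. External-address settings are left at gcloud's defaults, which may differ from the console's. max_vnics encodes an assumed per-vCPU NIC limit: the larger of 2 and the vCPU count, capped at 8. Under that assumption, three interfaces need more than the 2 that a 2-vCPU machine allows, which would explain why the lab uses the 4-vCPU e2-standard-4.

```python
from __future__ import annotations

import shlex

# Hypothetical placeholder: substitute the US zone assigned to your lab.
ZONE_US = "ZONE_US"

# (network, subnet) per interface, in nic0, nic1, nic2 order.
NICS = [
    ("privatenet", "privatesubnet-us"),
    ("managementnet", "managementsubnet-us"),
    ("mynetwork", "mynetwork"),
]


def max_vnics(vcpus: int) -> int:
    """Assumed limit: the larger of 2 and the vCPU count, capped at 8."""
    return min(max(vcpus, 2), 8)


def vm_appliance_cmd(zone: str) -> list[str]:
    cmd = ["gcloud", "compute", "instances", "create", "vm-appliance",
           f"--zone={zone}", "--machine-type=e2-standard-4"]
    # The first --network-interface flag becomes nic0 (the primary interface).
    for network, subnet in NICS:
        cmd.append(f"--network-interface=network={network},subnet={subnet}")
    return cmd


if __name__ == "__main__":
    assert len(NICS) <= max_vnics(4)  # e2-standard-4 has 4 vCPUs
    print(shlex.join(vm_appliance_cmd(ZONE_US)))
```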

Test Completed Task

Click Check my progress to verify that you completed the task. If you successfully created the VM instance with multiple network interfaces, you will see an assessment score.

Explore the network interface details

Explore the network interface details of vm-appliance within the Cloud console and within the VM's terminal.

- In the Cloud console, navigate to Navigation menu > Compute Engine > VM instances.

- In the Internal IP column for vm-appliance, click nic0 to open the Network interface details page.

- Verify that nic0 is attached to privatesubnet-us, is assigned an internal IP address within that subnet (172.16.0.0/24), and has applicable firewall rules.

- Click nic0 and select nic1.

- Verify that nic1 is attached to managementsubnet-us, is assigned an internal IP address within that subnet (10.130.0.0/20), and has applicable firewall rules.

- Click nic1 and select nic2.

- Verify that nic2 is attached to mynetwork, is assigned an internal IP address within that subnet (10.128.0.0/20), and has applicable firewall rules.

- In the Cloud console, navigate to Navigation menu > Compute Engine > VM instances.

- For vm-appliance, click SSH to launch a terminal and connect.

- Run the following command to list the network interfaces within the VM instance:

The output should look like this:
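To cross-check the interface details, this Python sketch tests whether each NIC's internal IP falls inside the subnet range that the verification steps list for it. The addresses in observed are hypothetical examples, so replace them with the ones your console shows. misplaced_nics is an illustrative helper.

```python
from __future__ import annotations

import ipaddress

# Subnet primary ranges per interface, as listed in the verification steps.
EXPECTED = {
    "nic0": ipaddress.ip_network("172.16.0.0/24"),  # privatesubnet-us
    "nic1": ipaddress.ip_network("10.130.0.0/20"),  # managementsubnet-us
    "nic2": ipaddress.ip_network("10.128.0.0/20"),  # mynetwork
}


def misplaced_nics(observed: dict[str, str]) -> list[str]:
    """Return the NICs whose internal IP lies outside the expected subnet."""
    return [nic for nic, ip in observed.items()
            if ipaddress.ip_address(ip) not in EXPECTED[nic]]


if __name__ == "__main__":
    # Hypothetical addresses: replace them with the ones your console shows.
    observed = {"nic0": "172.16.0.3", "nic1": "10.130.0.3", "nic2": "10.128.0.3"}
    print(misplaced_nics(observed) or "all interfaces are in their expected subnets")
```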

Explore the network interface connectivity

Demonstrate that the vm-appliance instance is connected to privatesubnet-us, managementsubnet-us and mynetwork by pinging VM instances on those subnets.

- In the Cloud console, navigate to Navigation menu > Compute Engine > VM instances.

- Note the internal IP addresses for privatenet-us-vm, managementnet-us-vm, mynet-us-vm, and mynet-eu-vm.

- Return to the SSH terminal for vm-appliance.

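- To test connectivity to privatenet-us-vm's internal IP, run the following command, substituting privatenet-us-vm's internal IP:

  This works!

- Repeat the same test by running the following:

- To test connectivity to managementnet-us-vm's internal IP, run the following command, substituting managementnet-us-vm's internal IP:

  This works!

- To test connectivity to mynet-us-vm's internal IP, run the following command, substituting mynet-us-vm's internal IP:

  This works!

- To test connectivity to mynet-eu-vm's internal IP, run the following command, substituting mynet-eu-vm's internal IP:

  This should not work. In a multiple-interface instance, every interface gets a route for the subnet it is in, but the instance gets only a single default route, associated with the primary interface (nic0). Unless you configure routing otherwise, traffic to any destination outside the directly attached subnets leaves through nic0 into privatenet, from which mynetwork is unreachable. This includes traffic to mynet-eu-vm, which is in mynetwork's EU subnet.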

- To list the routes for vm-appliance instance, run the following command:

The output should look like this:
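The choice of interface that vm-appliance uses for a given destination can be modeled as a longest-prefix match over its routes. This Python sketch assumes a simplified table in which each attached subnet gets a route on its own interface and the only default route uses the primary interface. It also assumes that eth0 to eth2 correspond to nic0 to nic2. The address 10.132.0.2 is a hypothetical mynetwork address chosen only because it lies outside 10.128.0.0/20. Compare the model with the routes your own listing shows.

```python
from __future__ import annotations

import ipaddress

# Simplified model of vm-appliance's routes: one per attached subnet, plus a
# single default route through the primary interface.
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "eth0"),      # default route (primary NIC only)
    (ipaddress.ip_network("172.16.0.0/24"), "eth0"),  # privatesubnet-us (nic0)
    (ipaddress.ip_network("10.130.0.0/20"), "eth1"),  # managementsubnet-us (nic1)
    (ipaddress.ip_network("10.128.0.0/20"), "eth2"),  # mynetwork (nic2)
]


def egress_interface(dst: str) -> str:
    """Return the interface of the most specific route that contains dst."""
    ip = ipaddress.ip_address(dst)
    matches = [(net, dev) for net, dev in ROUTES if ip in net]
    return max(matches, key=lambda route: route[0].prefixlen)[1]


if __name__ == "__main__":
    # Hypothetical destinations: one per attached subnet, plus a mynetwork
    # address outside 10.128.0.0/20.
    for dst in ("172.16.0.2", "10.130.0.2", "10.128.0.2", "10.132.0.2"):
        print(dst, "->", egress_interface(dst))
```

Under this model, a mynetwork address outside 10.128.0.0/20 leaves through eth0 into privatenet rather than through eth2.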

Congratulations!

In this lab you created a VM instance with three network interfaces and verified internal connectivity for VM instances on the subnets attached to the multiple-interface VM.

You also created two custom mode VPC networks with firewall rules and VM instances, and tested connectivity for VM instances across VPC networks.

Next steps / Learn more

To learn more about VPC networking, see Using VPC Networks.


Manual last updated April 03, 2024

Lab last tested April 02, 2024

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.