Optimizing Applications Using Cloud Profiler

GSP976

Introduction

In this lab, you will deploy an inefficient Go application that is configured to collect profile data. You will learn how to use Cloud Profiler to view the application's profile data and identify potential optimizations. Finally, you will evaluate approaches to modify the application, re-deploy it, and evaluate the effect of the modifications made.

Objectives

In this lab, you will learn how to:

- Use Cloud Profiler to understand the CPU time usage of an inefficient application

- Maximize the number of queries per second a server can process

- Reduce the memory usage of an application by eliminating unnecessary memory allocations

Setup

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab. Remember, once you start, you cannot pause a lab.

How to start your lab and sign in to the Google Cloud console

- Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is the Lab Details panel with the following:

  - The Open Google Cloud console button

  - Time remaining

  - The temporary credentials that you must use for this lab

  - Other information, if needed, to step through this lab

- Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

  The lab spins up resources, and then opens another tab that shows the Sign in page.

  Tip: Arrange the tabs in separate windows, side-by-side.

  Note: If you see the Choose an account dialog, click Use Another Account.

- If necessary, copy the Username below and paste it into the Sign in dialog.

  {{{user_0.username | "Username"}}}

  You can also find the Username in the Lab Details panel.

- Click Next.

- Copy the Password below and paste it into the Welcome dialog.

  {{{user_0.password | "Password"}}}

  You can also find the Password in the Lab Details panel.

- Click Next.

  Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

  Note: Using your own Google Cloud account for this lab may incur extra charges.

- Click through the subsequent pages:

  - Accept the terms and conditions.

  - Do not add recovery options or two-factor authentication (because this is a temporary account).

  - Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Activate Cloud Shell

Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5GB home directory and runs on Google Cloud. Cloud Shell provides command-line access to your Google Cloud resources.

- Click Activate Cloud Shell at the top of the Google Cloud console.

When you are connected, you are already authenticated, and the project is set to your Project_ID.

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

- (Optional) You can list the active account name with this command:

- Click Authorize.

Output:

- (Optional) You can list the project ID with this command:

Output:

For full documentation of gcloud, refer to the gcloud CLI overview guide.

Scenario

In this scenario, the server uses a gRPC framework, receives a word or phrase, and returns the number of times the word or phrase appears in the works of Shakespeare.

The average number of queries per second that the server can handle is determined by load testing the server. For each round of tests, a client simulator is called and instructed to issue 20 sequential queries. At the completion of a round, the number of queries sent by the client simulator, the elapsed time, and the average number of queries per second are displayed. The server code is inefficient by design, which leaves room for improvements to be made.

Task 1. Download and run the sample Application

In this lab, the sample application's execution time is just long enough to collect profile data. In practice, it's desirable to have at least 10 profiles before analyzing profile data.

- In Cloud Shell, run the following command:

- Run the application with the version set to 1 and the number of rounds set to 15:

If prompted, click Authorize on the modal dialog.

- Use the search bar at the top of the console to enter Profiler, and select the corresponding service from the drop-down when it appears.

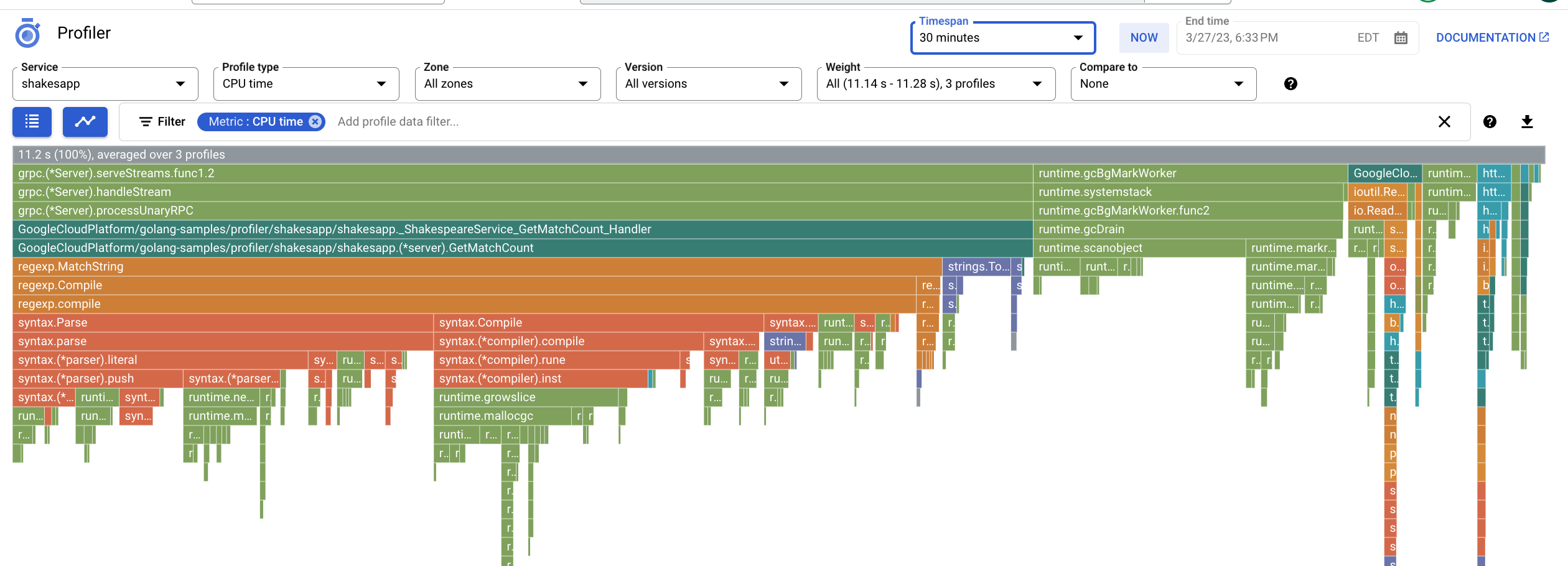

- Once the application run completes, you will see a flame graph similar to the figure below displaying the application's profile data. Flame graphs make efficient use of screen real estate by representing a large amount of information in a compact, readable format. You can read more about how Cloud Profiler creates flame graphs on the Flame graphs documentation page.

Notice that the Profile type is set to CPU time. This indicates that CPU usage data is displayed on the flame graph presented.

- The output after the application run in Cloud Shell should look similar to the following:

The output from Cloud Shell displays the elapsed time for each iteration and the average request rate.

When the application is started, the entry "Simulated 20 requests in 17.3s, rate of 1.156069 reqs / sec" indicates that the server is executing about 1 request per second.

By the last round, the entry "Simulated 20 requests in 1m48.03s, rate of 0.185134 reqs / sec" indicates that the server is executing about 1 request every 5 seconds.

Click Check my progress to verify the objective.

Task 2. Using CPU time profiles to maximize queries per second

One approach to maximizing the number of queries per second is to identify CPU intensive methods and optimize their implementations. In this section, you use CPU time profiles to identify a CPU intensive method on the server.

Identifying CPU time usage

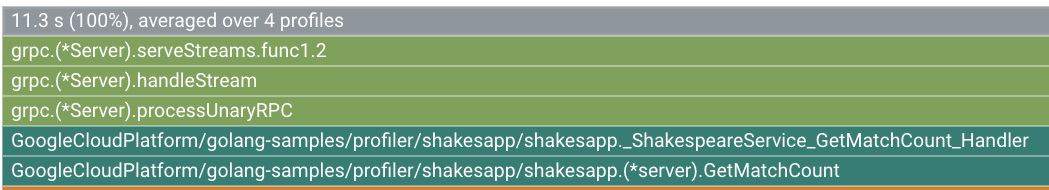

The root frame of the flame graph lists the total CPU time used by the application over the collection interval of 10 seconds:

In the example above, the service used 11.3s. When the system runs on a single core, a CPU time usage of 11.3 seconds corresponds to 113% utilization of that core! For more information on profiling, see the types of profiling available.

Task 3. Modifying the application

Step 1: Which function is CPU time intensive?

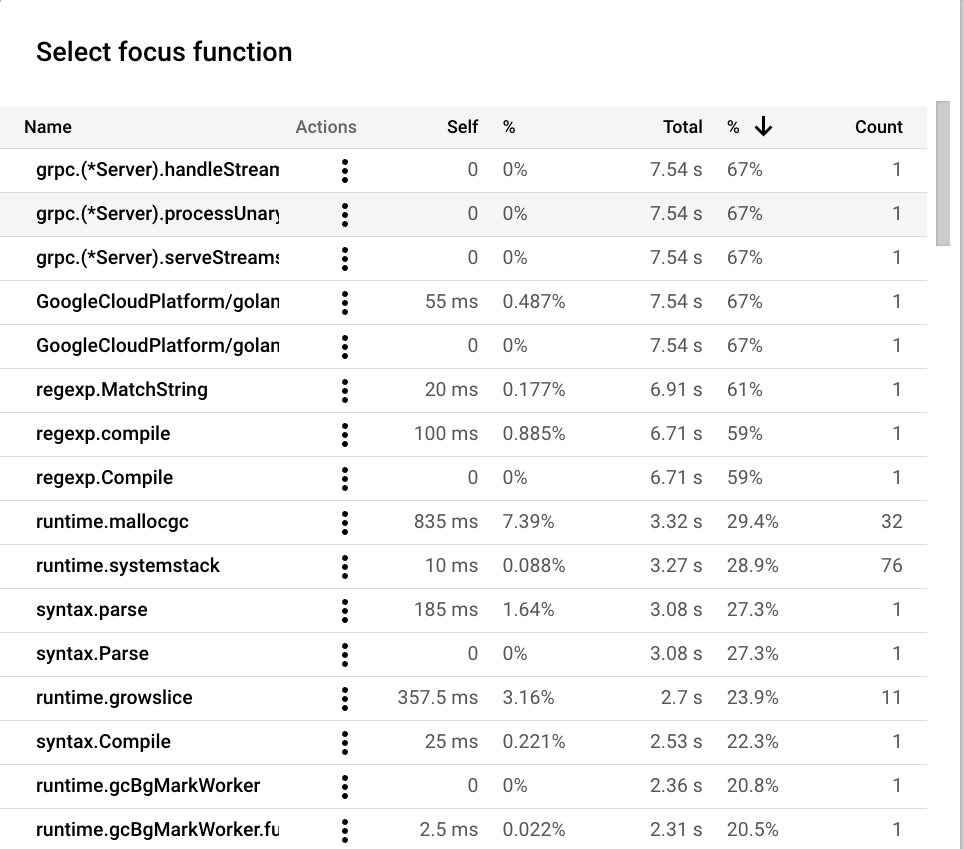

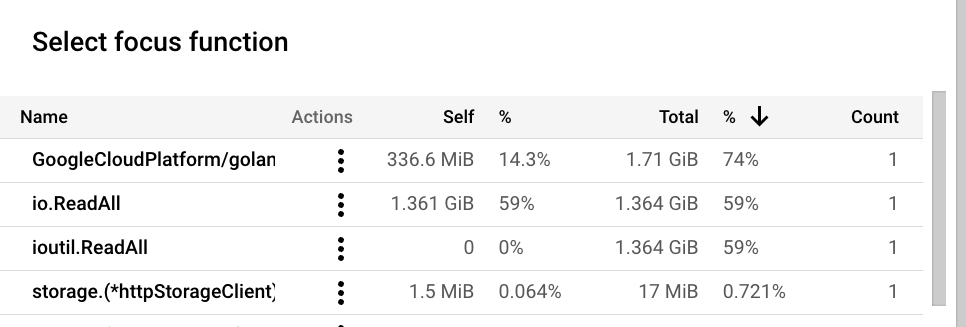

One way you can identify code that might need to be optimized is to view the table of functions and identify greedy functions:

- To view the table, click Focus function list.

- Sort the table by Total. The column labeled Total shows the CPU time usage of a function and its children.

In this example, GetMatchCount is the first shakesapp/server.go function that is listed. That function used 7.54s of CPU time, or 67% of the application's total CPU time. This function is known to handle the gRPC requests.

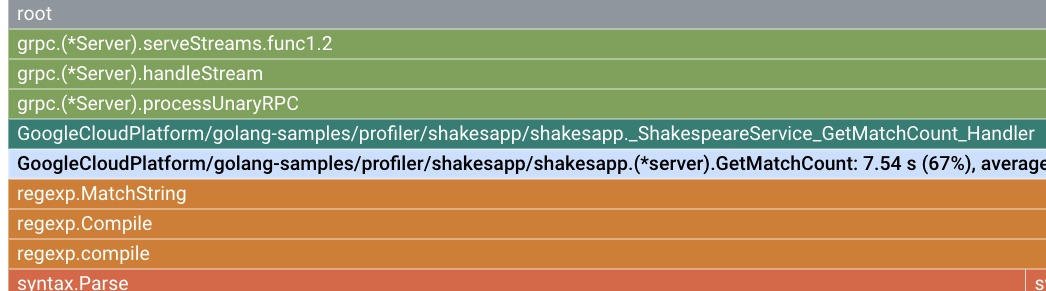

The flame graph shows that the shakesapp/server.go function GetMatchCount calls MatchString, which in turn is spending most of its time calling Compile:

Step 2: How can you use what you've learned?

- Rely on your language expertise. MatchString is a regular-expression method. In general, regular-expression processing is very flexible, but not necessarily the most efficient solution for every problem.

- Rely on your application expertise. The client is generating a word or phrase, and the server is searching for this phrase.

- Search the implementation of the shakesapp/server.go method GetMatchCount for uses of MatchString, and then determine if a simpler, more efficient function could replace that call.

Step 3: How can you change the application?

In the file shakesapp/server.go, the existing code contains one call to MatchString:

One option is to replace the MatchString logic with equivalent logic that uses strings.Contains.

Click the Open Editor button at the top of Cloud Shell. When the editor opens, open the file /profiler/shakesapp/shakesapp/server.go. Replace the MatchString logic shown above with the snippet below, and then save the file.

Task 4. Evaluate the change

To evaluate the change, do the following:

- Run the following command in Cloud Shell to set the application version to 2:

- Wait for the application to complete, and then view the profile data for this version of the application:

- Click NOW to load the most recent profile data. For more information, see Range of time.

- In the Version menu, select 2.

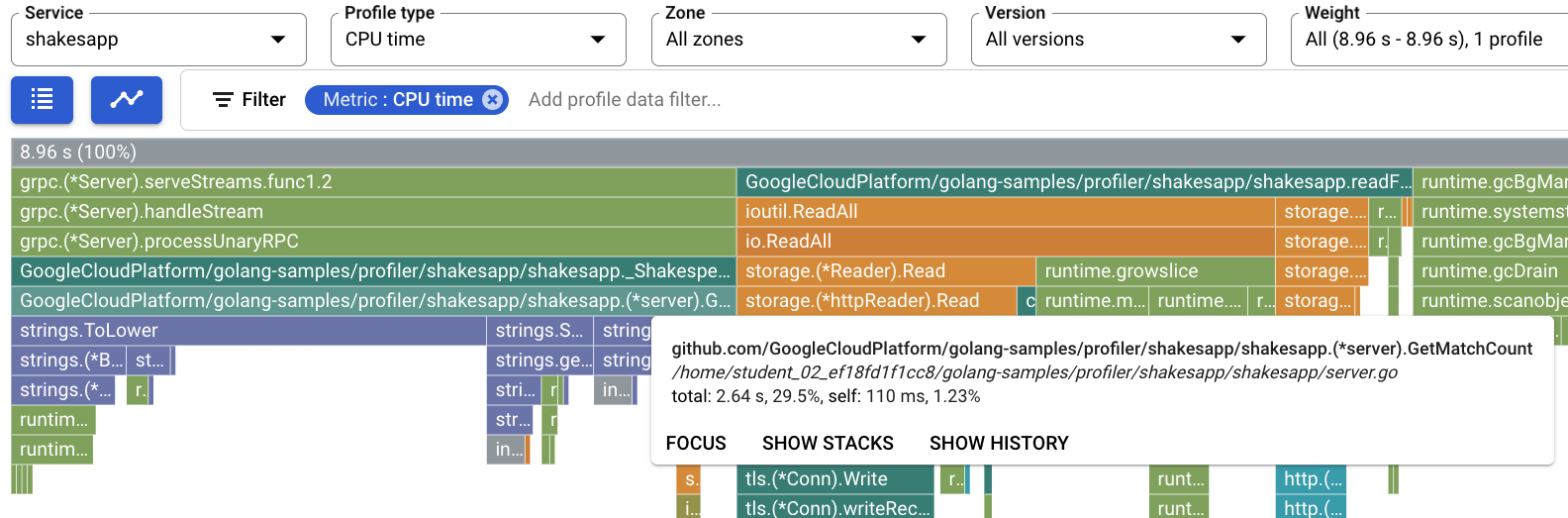

For one example, the flame graph is as shown:

In this figure, the root frame shows a value of 8.96 s. As a result of changing the string-match function, the CPU time used by the application decreased from 11.3 seconds to 8.96 seconds, or the application went from using 113% of a CPU core to using 89.6% of a CPU core.

The frame width is a proportional measure of the CPU time usage. In this example, the width of the frame for GetMatchCount indicates that function uses about 29.5% of all CPU time used by the application. In the original flame graph, this same frame was about 67% of the width of the graph. To view the exact CPU time usage, you can use the frame tooltip or you can use the Focus function list:

The small change to the application had two different effects:

- The number of requests per second increased from less than 1 per second to greater than 5 per second.

- The CPU time per request, computed by dividing the CPU utilization by the number of requests per second, decreased.

Task 5. Using allocated heap profiles to improve resource usage

In this task, you will learn how to use the heap and allocated heap profiles to identify an allocation-intensive method in the application.

- Heap profiles show the amount of memory allocated in the program's heap at the instant the profile is collected.

- Allocated heap profiles show the total amount of memory that was allocated in the program's heap during the interval in which the profile was collected. By dividing these values by 10 seconds, the profile collection interval, you can interpret these as allocation rates.

Enabling heap profile collection

- Back in Cloud Shell, run the application with the application version set to 3 and enable the collection of heap and allocated heap profiles.

- Wait for the application to complete, and then view the profile data for this version of the application:

- Click NOW to load the most recent profile data.

- In the Version menu, select 3.

- In the Profile type menu, select Allocated heap.

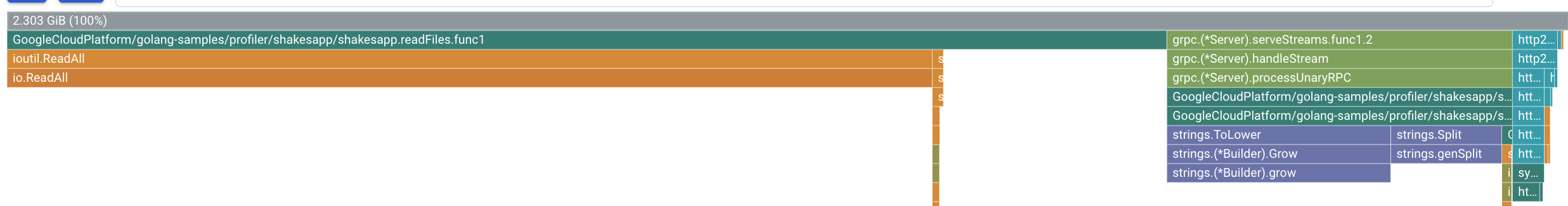

For one example, the flame graph is as shown:

Identifying the heap allocation rate

The root frame displays the total amount of heap that was allocated during the 10 seconds when a profile was collected, averaged over all profiles. In this example, the root frame shows that, on average, 2.303 GiB of memory was allocated.

Task 6. Modifying the application

Step 1: Is it worth minimizing the rate of heap allocation?

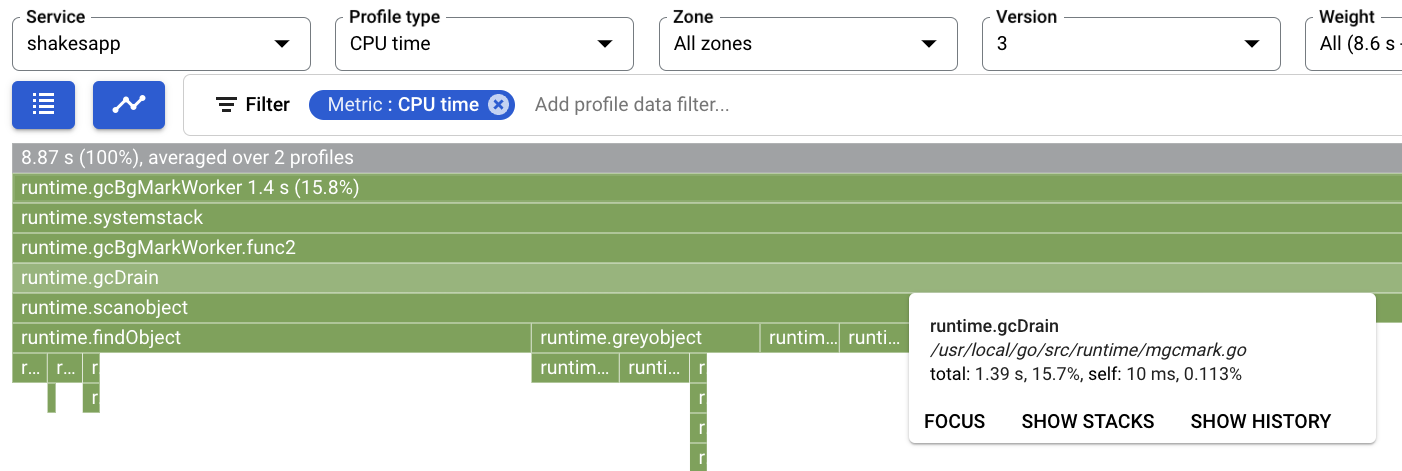

The CPU time usage of the Go background garbage collection function, runtime.gcBgMarkWorker.*, can be used to determine if it's worth the effort to optimize an application to reduce garbage collection costs:

- Skip optimization if the CPU time usage is less than 5%.

- Optimize if the CPU time usage is at least 25%.

For this example, the CPU time usage of the background garbage collector is 15.8%. This value lies between the two thresholds, and it is high enough that it's worth attempting to optimize shakesapp/server.go.
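The rule of thumb above can be written down as a small helper. This is only a sketch: the function name and the returned labels are assumptions, and the middle band is labeled a judgment call because the guidance gives no fixed rule for values between 5% and 25%.

```go
package main

import "fmt"

// gcAdvice applies the rule of thumb to the share of CPU time (in
// percent) spent in the background garbage collector.
// The name and the returned labels are hypothetical.
func gcAdvice(gcCPUPercent float64) string {
	switch {
	case gcCPUPercent < 5:
		return "skip optimization"
	case gcCPUPercent >= 25:
		return "optimize"
	default:
		// Between the two thresholds the decision is left to the reader.
		return "judgment call"
	}
}

func main() {
	for _, p := range []float64{3.0, 15.8, 30.0} {
		fmt.Printf("%.1f%% -> %s\n", p, gcAdvice(p))
	}
}
```

The 15.8% measured in this lab falls into the middle band, which is why the decision to optimize is presented as a choice rather than a requirement.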

Step 2: Which function allocates a lot of memory?

The file shakesapp/server.go contains two functions that might be targets for optimization: GetMatchCount and readFiles. To determine the rate of memory allocation for these functions, set the Profile type to Allocated heap, and then use the Focus function list.

In this example, the total heap allocation for readFiles.func1 during the 10 second profile collection is, on average, 1.71 GiB or 74% of the total allocated memory. The self heap allocation during the 10 second profile collection is 336.6 MiB:

In this example, the Go method io.ReadAll allocated 1.361 GiB during the 10 second profile collection, on average. The simplest way to reduce these allocations is to reduce calls to io.ReadAll. The function readFiles calls io.ReadAll through a library method.

Step 3: How can you change the application?

One option is to modify the application to read the files once and then reuse that content. To make the following changes, open Cloud Shell and then the editor, as in the previous task, and edit the server.go file, which should be located at the path golang-samples/profiler/shakesapp/shakesapp:

- Define a global variable files to store the results of the initial file read:

Place this under the package imports at the top of the server.go file.

- Modify readFiles to return early when files is defined:

Replace the logic below:

With the following snippet:

After making changes in the readFiles function, the code should look like this.

Save the file and click Open Terminal to go back to Cloud Shell.

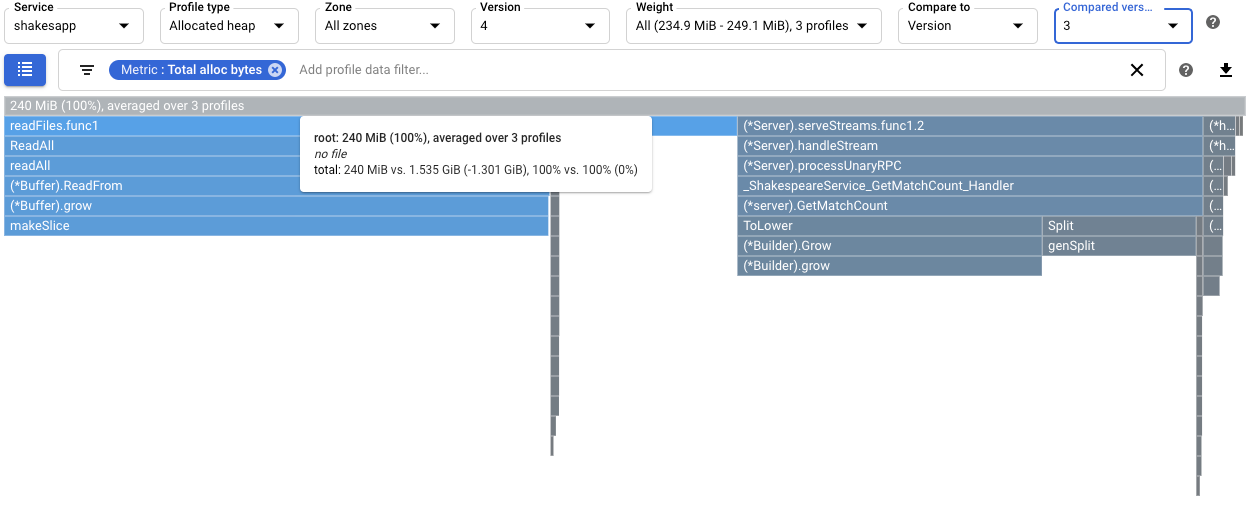

Task 7. Evaluating the change

To evaluate the change, do the following:

- Run the application with the application version set to 4:

- Wait for the application to complete, and then view the profile data for this version of the application:

- Click NOW to load the most recent profile data.

- In the Version menu, select 4.

- In the Profile type menu, select Allocated heap.

- To quantify the effect of changing readFiles on the heap allocation rate, compare the allocated heap profiles for version 4 to those collected for version 3:

- In this example, the root frame's tooltip shows that with version 4, the average amount of memory allocated during profile collection decreased by 1.301 GiB, as compared to version 3. The tooltip for readFiles.func1 shows a decrease of 1.045 GiB:

- To determine if there is an impact of the change on the number of requests per second handled by the application, view the output in the Cloud Shell.

In the example below, version 4 completes up to 15 requests per second, which is substantially higher than the ~5 requests per second of version 3:

The increase in queries per second served by the application might be due to less time being spent on garbage collection.

Congratulations!

In this lab, you explored the Cloud Operations suite, which allows Site Reliability Engineers (SREs) to investigate and diagnose issues experienced with deployed workloads.

CPU time and allocated heap profiles were used to identify potential optimizations to an application. The goals were to maximize the number of requests per second and to eliminate unnecessary allocations.

By using CPU time profiles, a CPU intensive function was identified. After applying a simple change, the server's request rate increased.

By using allocated heap profiles, the shakesapp/server.go function readFiles was identified as having a high allocation rate. After optimizing readFiles, the server's request rate increased to 15 requests per second.

Finish your quest

This self-paced lab is part of the Cloud Architecture and DevOps Essentials quests. A quest is a series of related labs that form a learning path. Completing a quest earns you a badge to recognize your achievement. You can make your badge or badges public and link to them in your online resume or social media account. Enroll in any quest that contains this lab and get immediate completion credit. Refer to the Google Cloud Skills Boost catalog for all available quests.

Take your next lab

Continue your quest or check out these suggested materials:

- Practice and learn Cloud Operations on Google Cloud with the Cloud Operations Sandbox.

- Monitoring and Logging for Cloud Functions

Next steps

- Learn more about the Google Cloud Operations Suite.

Manual Last Updated: August 16, 2023

Lab Last Tested: August 16, 2023

End your lab

When you have completed your lab, click End Lab. Your account and the resources you've used are removed from the lab platform.

You will be given an opportunity to rate the lab experience. Select the applicable number of stars, type a comment, and then click Submit.

The number of stars indicates the following:

- 1 star = Very dissatisfied

- 2 stars = Dissatisfied

- 3 stars = Neutral

- 4 stars = Satisfied

- 5 stars = Very satisfied

You can close the dialog box if you don't want to provide feedback.

For feedback, suggestions, or corrections, please use the Support tab.

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.