GSP1226

Introduction

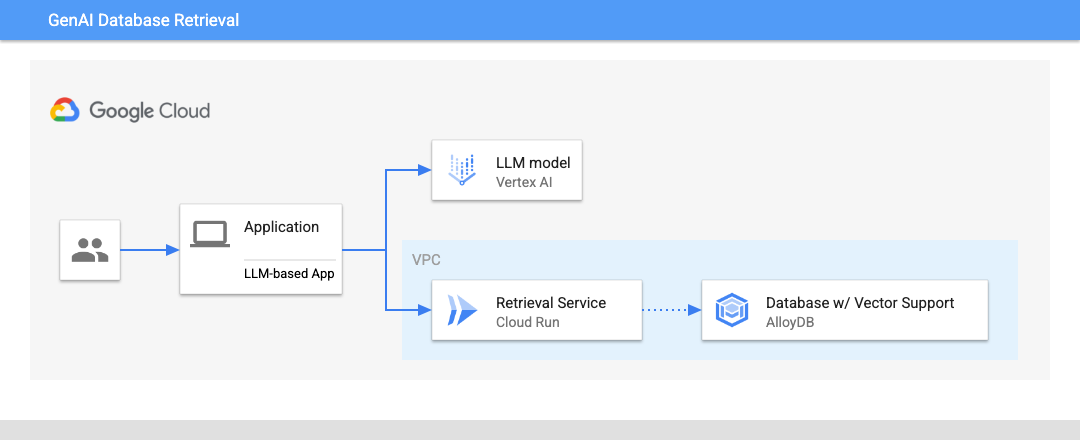

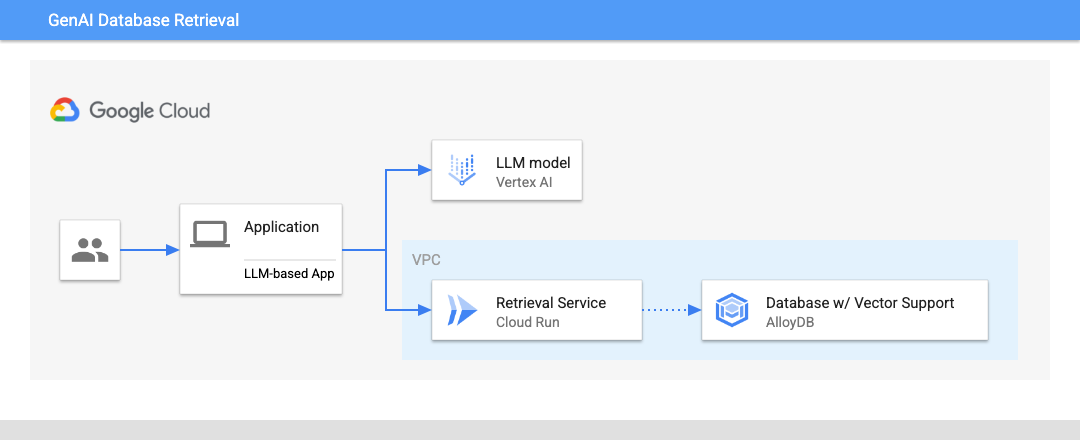

In this codelab you will learn how to deploy the GenAI Databases Retrieval Service and create a sample interactive application using the deployed environment.

You can get more information about the GenAI Databases Retrieval Service and the sample application on the project's GitHub page.

Prerequisites

- A basic understanding of the Google Cloud Console

- Basic command-line skills and familiarity with Google Cloud Shell

What you'll learn

- How to connect to AlloyDB

- How to configure and deploy GenAI Databases Retrieval Service

- How to deploy a sample application using the deployed service

What you'll need

- A Google Cloud Account and Google Cloud Project

- A web browser such as Chrome

Setup and Requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

Note: Use an Incognito or private browser window to run this lab. This prevents conflicts between your personal account and the Student account, which could cause extra charges to your personal account.

- Time to complete the lab. Remember, once you start, you cannot pause a lab.

Note: If you already have your own personal Google Cloud account or project, do not use it for this lab to avoid extra charges to your account.

How to start your lab and sign in to the Google Cloud console

- Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method.

On the left is the Lab Details panel with the following:

- The Open Google Cloud console button

- Time remaining

- The temporary credentials that you must use for this lab

- Other information, if needed, to step through this lab

- Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

The lab spins up resources, and then opens another tab that shows the Sign in page.

Tip: Arrange the tabs in separate windows, side-by-side.

Note: If you see the Choose an account dialog, click Use Another Account.

- If necessary, copy the Username below and paste it into the Sign in dialog.

{{{user_0.username | "Username"}}}

You can also find the Username in the Lab Details panel.

- Click Next.

- Copy the Password below and paste it into the Welcome dialog.

{{{user_0.password | "Password"}}}

You can also find the Password in the Lab Details panel.

- Click Next.

Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

Note: Using your own Google Cloud account for this lab may incur extra charges.

- Click through the subsequent pages:

- Accept the terms and conditions.

- Do not add recovery options or two-factor authentication (because this is a temporary account).

- Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Note: To view a menu with a list of Google Cloud products and services, click the Navigation menu at the top-left.

Activate Cloud Shell

Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5GB home directory and runs on Google Cloud. Cloud Shell provides command-line access to your Google Cloud resources.

- Click Activate Cloud Shell at the top of the Google Cloud console.

When you are connected, you are already authenticated, and the project is set to your PROJECT_ID. The output contains a line that declares the PROJECT_ID for this session:

Your Cloud Platform project in this session is set to {{{project_0.project_id | "PROJECT_ID"}}}

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

- (Optional) You can list the active account name with this command:

gcloud auth list

- Click Authorize.

Output:

ACTIVE: *

ACCOUNT: {{{user_0.username | "ACCOUNT"}}}

To set the active account, run:

$ gcloud config set account `ACCOUNT`

- (Optional) You can list the project ID with this command:

gcloud config list project

Output:

[core]

project = {{{project_0.project_id | "PROJECT_ID"}}}

Note: For full documentation of gcloud, refer to the gcloud CLI overview guide.

Task 1. Initialize the Environment

Configure Cloud Shell

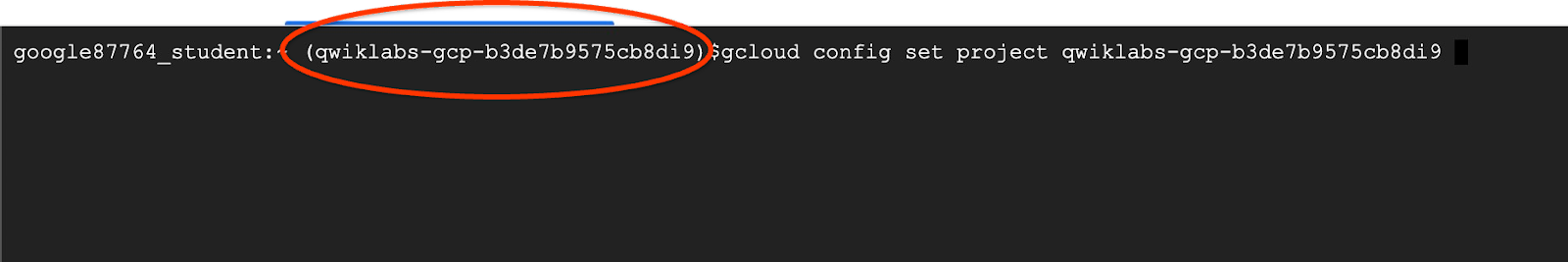

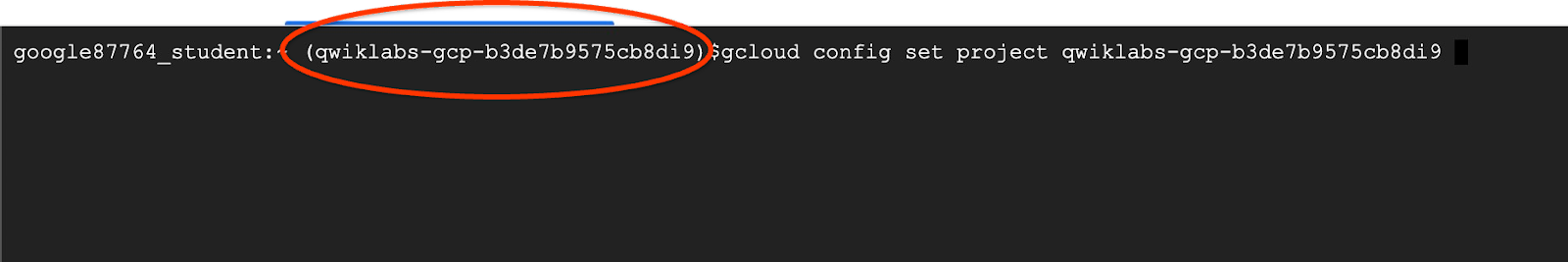

Inside Cloud Shell, make sure that your project ID is set up:

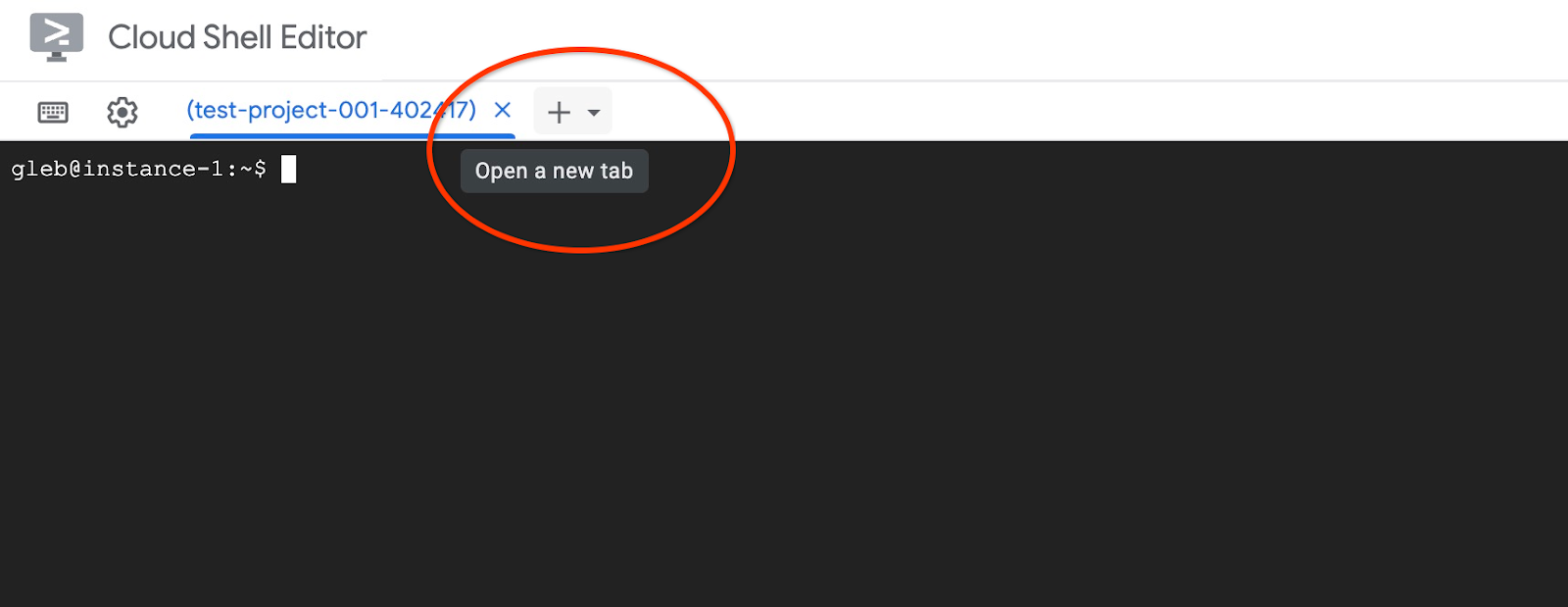

The project ID is usually shown in parentheses in the Cloud Shell command prompt, as in the picture.

Configure your default region to us-central1 to use the Vertex AI models. Read more about regional restrictions.

gcloud config set compute/region {{{project_0.default_region | REGION }}}

Install Postgres Client

Install the PostgreSQL client software on the deployed VM.

Connect to the VM:

Note: The first SSH connection to the VM can take longer, because the process includes creating an RSA key for the secure connection and propagating the public part of the key to the project.

gcloud compute ssh instance-1 --zone={{{project_0.default_zone | ZONE }}}

Expected console output:

student@cloudshell:~ (test-project-402417)$ gcloud compute ssh instance-1 --zone={{{ project_0.default_zone | ZONE }}}

Updating project ssh metadata...working..Updated [https://www.googleapis.com/compute/v1/projects/test-project-402417].

Updating project ssh metadata...done.

Waiting for SSH key to propagate.

Warning: Permanently added 'compute.5110295539541121102' (ECDSA) to the list of known hosts.

Linux instance-1 5.10.0-26-cloud-amd64 #1 SMP Debian 5.10.197-1 (2023-09-29) x86_64

The programs included with the Debian GNU/Linux system are free software; the exact distribution terms for each program are described in the individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent permitted by applicable law.

student@instance-1:~$

Install the software by running these commands inside the VM:

sudo apt-get update

sudo apt-get install --yes postgresql-client

Expected console output:

student@instance-1:~$ sudo apt-get update

sudo apt-get install --yes postgresql-client

Get:1 https://packages.cloud.google.com/apt google-compute-engine-bullseye-stable InRelease [5146 B]

Get:2 https://packages.cloud.google.com/apt cloud-sdk-bullseye InRelease [6406 B]

Hit:3 https://deb.debian.org/debian bullseye InRelease

Get:4 https://deb.debian.org/debian-security bullseye-security InRelease [48.4 kB]

Get:5 https://packages.cloud.google.com/apt google-compute-engine-bullseye-stable/main amd64 Packages [1930 B]

Get:6 https://deb.debian.org/debian bullseye-updates InRelease [44.1 kB]

Get:7 https://deb.debian.org/debian bullseye-backports InRelease [49.0 kB]

...redacted...

update-alternatives: using /usr/share/postgresql/13/man/man1/psql.1.gz to provide /usr/share/man/man1/psql.1.gz (psql.1.gz) in auto mode

Setting up postgresql-client (13+225) ...

Processing triggers for man-db (2.9.4-2) ...

Processing triggers for libc-bin (2.31-13+deb11u7) ...

Connect to the Instance

Connect to the primary instance from the VM using psql.

Continue with the opened SSH session to your VM. If you have been disconnected then connect again using the same command as above.

Use the AlloyDB password (PGPASSWORD) and the cluster name to connect to AlloyDB from the GCE VM:

export PGPASSWORD={{{project_0.startup_script.gcp_alloydb_password | PG_PASSWORD}}}

export PROJECT_ID=$(gcloud config get-value project)

export REGION={{{project_0.default_region | REGION }}}

export ADBCLUSTER={{{project_0.startup_script.gcp_alloydb_cluster_name | CLUSTER}}}

export INSTANCE_IP=$(gcloud alloydb instances describe $ADBCLUSTER-pr --cluster=$ADBCLUSTER --region=$REGION --format="value(ipAddress)")

psql "host=$INSTANCE_IP user=postgres sslmode=require"

Expected console output:

student@instance-1:~$ export PGPASSWORD=P9...

student@instance-1:~$ export REGION=us-central1

student@instance-1:~$ export ADBCLUSTER=alloydb-aip-01

student@instance-1:~$ export INSTANCE_IP=$(gcloud alloydb instances describe $ADBCLUSTER-pr --cluster=$ADBCLUSTER --region=$REGION --format="value(ipAddress)")

student@instance-1:~$ psql "host=$INSTANCE_IP user=postgres sslmode=require"

psql (13.11 (Debian 13.11-0+deb11u1), server 14.7)

WARNING: psql major version 13, server major version 14.

Some psql features might not work.

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, bits: 256, compression: off)

Type "help" for help.

postgres=>

Exit the psql session, keeping the SSH connection open:

exit

Expected console output:

postgres=> exit

student@instance-1:~$
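The psql command above passes a libpq keyword/value connection string assembled from shell variables. If you later want to build the same string from Python (for example in a small helper script of your own), a minimal sketch could look like the following. The function names and the script are hypothetical and not part of the lab repository. The quoting follows libpq's rule that a value may be wrapped in single quotes, with backslashes and single quotes inside it escaped by a backslash. The password is deliberately left out because psql reads it from PGPASSWORD.

```python
import os
from typing import Mapping


def quote_conninfo_value(value: str) -> str:
    """Quote a value for a libpq keyword/value connection string.

    Values are always single-quoted here; inside the quotes, libpq requires
    backslashes and single quotes to be escaped with a backslash.
    """
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    return f"'{escaped}'"


def build_conninfo(params: Mapping[str, str]) -> str:
    """Join keyword/value pairs into a string such as host='...' user='...'."""
    return " ".join(f"{key}={quote_conninfo_value(val)}" for key, val in params.items())


def conninfo_from_env(env: Mapping[str, str] = os.environ) -> str:
    """Mirror the lab's psql call: host from INSTANCE_IP, user postgres, TLS required.

    No password is included: psql (libpq) picks it up from PGPASSWORD.
    """
    return build_conninfo({"host": env["INSTANCE_IP"], "user": "postgres", "sslmode": "require"})


if __name__ == "__main__":
    print(conninfo_from_env())
```

Saved as a hypothetical conninfo.py on the VM, it could be used as `psql "$(python3 conninfo.py)"` while INSTANCE_IP and PGPASSWORD are exported.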

Task 2. Initialize the database

We are going to use our client VM as a platform to populate our database with data and host our application. The first step is to create a database and populate it with data.

Create Database

In the GCE VM session execute:

Note: If your SSH session was terminated, you need to set your environment variables again, for example:

export PGPASSWORD=

export INSTANCE_IP=

- Create a database named "assistantdemo".

psql "host=$INSTANCE_IP user=postgres" -c "CREATE DATABASE assistantdemo"

- Expected console output:

student@instance-1:~$ psql "host=$INSTANCE_IP user=postgres" -c "CREATE DATABASE assistantdemo"

CREATE DATABASE

student@instance-1:~$

- Enable the pgvector extension.

psql "host=$INSTANCE_IP user=postgres dbname=assistantdemo" -c "CREATE EXTENSION vector"

- Expected console output:

student@instance-1:~$ psql "host=$INSTANCE_IP user=postgres dbname=assistantdemo" -c "CREATE EXTENSION vector"

CREATE EXTENSION

student@instance-1:~$
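CREATE EXTENSION vector enables pgvector, which adds a vector column type and distance operators; among them, <-> computes Euclidean (L2) distance and <=> computes cosine distance (1 minus cosine similarity). The pure-Python sketch below only illustrates that arithmetic and the nearest-neighbour ranking that a query such as ORDER BY embedding <=> query LIMIT k performs when no index is used. It is not how the retrieval service is implemented, and this lab does not say which operator the service uses; all names here are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance, the quantity pgvector's <-> operator returns."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity, the quantity pgvector's <=> operator returns."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        # Cosine distance is undefined for a zero vector; this sketch refuses it.
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / norm


def nearest(query: Sequence[float],
            rows: Sequence[Tuple[str, Sequence[float]]],
            k: int = 1) -> List[str]:
    """Return ids of the k rows closest to query by cosine distance (exact scan)."""
    ranked = sorted(rows, key=lambda row: cosine_distance(query, row[1]))
    return [row_id for row_id, _ in ranked[:k]]
```

With an approximate index (for example IVFFlat or HNSW) PostgreSQL may return slightly different neighbours than this exact scan, in exchange for speed.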

Task 3. Install Python

Next we are going to use prepared Python scripts from a GitHub repository, but first we need to install the required software.

- In the GCE VM execute:

sudo apt install -y git build-essential libssl-dev zlib1g-dev \
libbz2-dev libreadline-dev libsqlite3-dev curl \
libncursesw5-dev xz-utils tk-dev libxml2-dev libxmlsec1-dev libffi-dev liblzma-dev

- Expected console output:

student@instance-1:~$ sudo apt install -y git build-essential libssl-dev zlib1g-dev \
libbz2-dev libreadline-dev libsqlite3-dev curl \
libncursesw5-dev xz-utils tk-dev libxml2-dev libxmlsec1-dev libffi-dev liblzma-dev

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

xz-utils is already the newest version (5.2.5-2.1~deb11u1).

The following additional packages will be installed:

...

- Install pyenv. In the GCE VM execute:

curl https://pyenv.run | bash

echo 'export PYENV_ROOT="$HOME/.pyenv"' >> ~/.bashrc

echo 'command -v pyenv >/dev/null || export PATH="$PYENV_ROOT/bin:$PATH"' >> ~/.bashrc

echo 'eval "$(pyenv init -)"' >> ~/.bashrc

exec "$SHELL"

- Expected console output (redacted):

curl https://pyenv.run | bash

echo 'export PYENV_ROOT="$HOME/.pyenv"' >> ~/.bashrc

echo 'command -v pyenv >/dev/null || export PATH="$PYENV_ROOT/bin:$PATH"' >> ~/.bashrc

echo 'eval "$(pyenv init -)"' >> ~/.bashrc

exec "$SHELL"

...

- Install Python 3.11.x.

pyenv install 3.11.6

pyenv global 3.11.6

python -V

- Expected console output:

student@instance-1:~$ pyenv install 3.11.6

pyenv global 3.11.6

python -V

Downloading Python-3.11.6.tar.xz...

-> https://www.python.org/ftp/python/3.11.6/Python-3.11.6.tar.xz

Installing Python-3.11.6...

Installed Python-3.11.6 to /home/student/.pyenv/versions/3.11.6

Python 3.11.6

student@instance-1:~$
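To confirm that the pyenv-managed interpreter is the one on your PATH, a short check such as the one below could be run with python. Treating any 3.11 or newer interpreter as acceptable is an assumption for illustration; the lab itself installs exactly 3.11.6. The function name is hypothetical.

```python
import sys

# Assumption: any 3.11+ interpreter is acceptable; the lab pins 3.11.6.
REQUIRED = (3, 11)


def meets_requirement(version_info=sys.version_info, required=REQUIRED) -> bool:
    """Compare only (major, minor) against the required version."""
    return tuple(version_info[:2]) >= tuple(required)


if __name__ == "__main__":
    # With pyenv active this path typically points under ~/.pyenv/versions/.
    print(f"interpreter: {sys.executable}")
    print(f"version: {sys.version.split()[0]}")
    if not meets_requirement():
        sys.exit(f"Python {REQUIRED[0]}.{REQUIRED[1]}+ required")
```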

Task 4. Populate Database

Clone the GitHub repository with the code for the retrieval service and sample application.

- In the GCE VM execute:

git clone https://github.com/GoogleCloudPlatform/genai-databases-retrieval-app.git

- Expected console output:

student@instance-1:~$ git clone https://github.com/GoogleCloudPlatform/genai-databases-retrieval-app.git

Cloning into 'genai-databases-retrieval-app'...

remote: Enumerating objects: 525, done.

remote: Counting objects: 100% (336/336), done.

remote: Compressing objects: 100% (201/201), done.

remote: Total 525 (delta 224), reused 179 (delta 135), pack-reused 189

Receiving objects: 100% (525/525), 46.58 MiB | 16.16 MiB/s, done.

Resolving deltas: 100% (289/289), done.

- Prepare the configuration file.

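Note:

If your SSH session was terminated, you need to set your environment variables again, for example:

export PGPASSWORD={{{project_0.startup_script.gcp_alloydb_password | PG_PASSWORD}}}

export REGION={{{project_0.default_region | REGION }}}

export ADBCLUSTER={{{project_0.startup_script.gcp_alloydb_cluster_name | CLUSTER}}}

export INSTANCE_IP=$(gcloud alloydb instances describe $ADBCLUSTER-pr --cluster=$ADBCLUSTER --region=$REGION --format="value(ipAddress)")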

- In the GCE VM execute:

cd genai-databases-retrieval-app/retrieval_service

cp example-config.yml config.yml

sed -i s/127.0.0.1/$INSTANCE_IP/g config.yml

sed -i s/my-password/$PGPASSWORD/g config.yml

sed -i s/my_database/assistantdemo/g config.yml

sed -i s/my-user/postgres/g config.yml

cat config.yml

- Expected console output:

student@instance-1:~$ cd genai-databases-retrieval-app/retrieval_service

cp example-config.yml config.yml

sed -i s/127.0.0.1/$INSTANCE_IP/g config.yml

sed -i s/my-password/$PGPASSWORD/g config.yml

sed -i s/my_database/assistantdemo/g config.yml

sed -i s/my-user/postgres/g config.yml

cat config.yml

host: 0.0.0.0
# port: 8080
datastore:
  # Example for AlloyDB
  kind: "postgres"
  host: 10.65.0.2
  # port: 5432
  database: "assistantdemo"
  user: "postgres"
  password: "P9..."
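The four sed commands above replace placeholder strings in example-config.yml. One caveat is that sed treats characters such as / and & specially in the replacement text, so a password containing them would break the substitution. A minimal Python sketch of the same literal replacements, assuming the placeholders are exactly the ones the sed commands target, could look like this (the function and constant names are hypothetical):

```python
import os
from pathlib import Path

# Placeholders in example-config.yml, as targeted by the lab's sed commands.
PLACEHOLDERS = ("127.0.0.1", "my-password", "my_database", "my-user")


def render_config(template: str, instance_ip: str, password: str,
                  database: str = "assistantdemo", user: str = "postgres") -> str:
    """Apply the lab's four substitutions as literal string replacements.

    str.replace treats the new value literally, so characters that are special
    in a sed replacement (such as / or &) need no escaping.
    """
    values = (instance_ip, password, database, user)
    for placeholder, value in zip(PLACEHOLDERS, values):
        template = template.replace(placeholder, value)
    return template


if __name__ == "__main__":
    # Run from genai-databases-retrieval-app/retrieval_service, like the lab's commands.
    source = Path("example-config.yml").read_text()
    rendered = render_config(source, os.environ["INSTANCE_IP"], os.environ["PGPASSWORD"])
    Path("config.yml").write_text(rendered)
    print(rendered)
```

The password still ends up inside a double-quoted YAML string, so a password containing a double quote or a backslash would additionally need YAML escaping, which this sketch does not handle.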

- Populate database with the sample dataset.

- In the GCE VM execute:

pip install -r requirements.txt

python run_database_init.py

- Expected console output (redacted):

student@instance-1:~/genai-databases-retrieval-app/retrieval_service$ pip install -r requirements.txt

python run_database_init.py

Collecting asyncpg==0.28.0 (from -r requirements.txt (line 1))

Obtaining dependency information for asyncpg==0.28.0 from https://files.pythonhosted.org/packages/77/a4/88069f7935b14c58534442a57be3299179eb46aace2d3c8716be199ff6a6/asyncpg-0.28.0-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata

Downloading asyncpg-0.28.0-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (4.3 kB)

Collecting fastapi==0.101.1 (from -r requirements.txt (line 2))

...

database init done.

student@instance-1:~/genai-databases-retrieval-app/retrieval_service$

Task 5. Deploy the Extension Service to Cloud Run

Now we can deploy the extension service to Cloud Run.

Create Service Account

Create a service account for the extension service and grant necessary privileges.

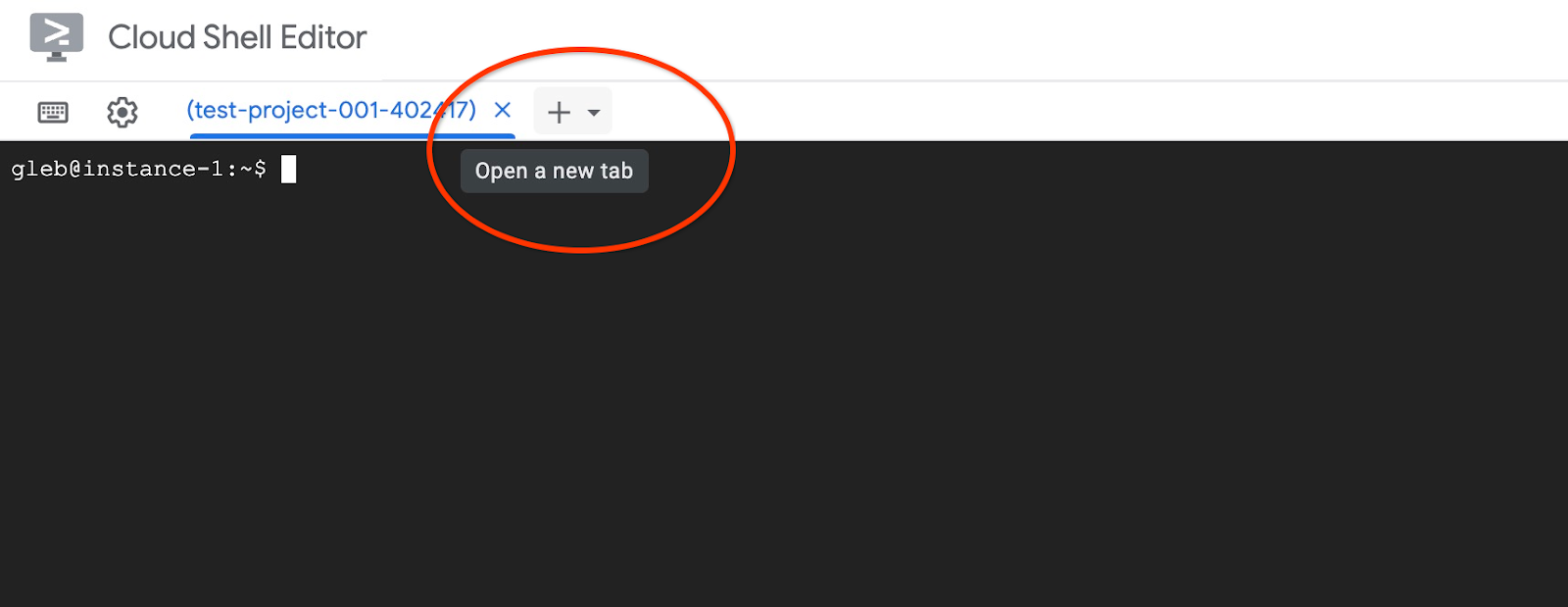

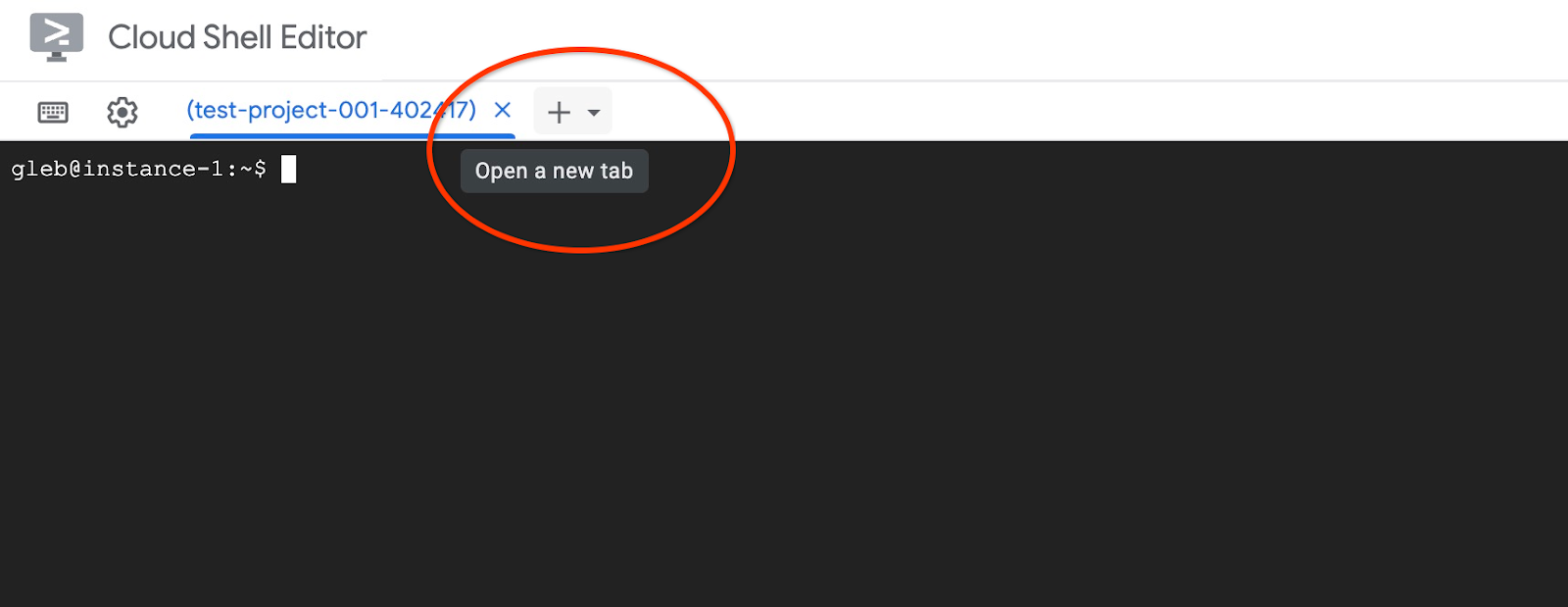

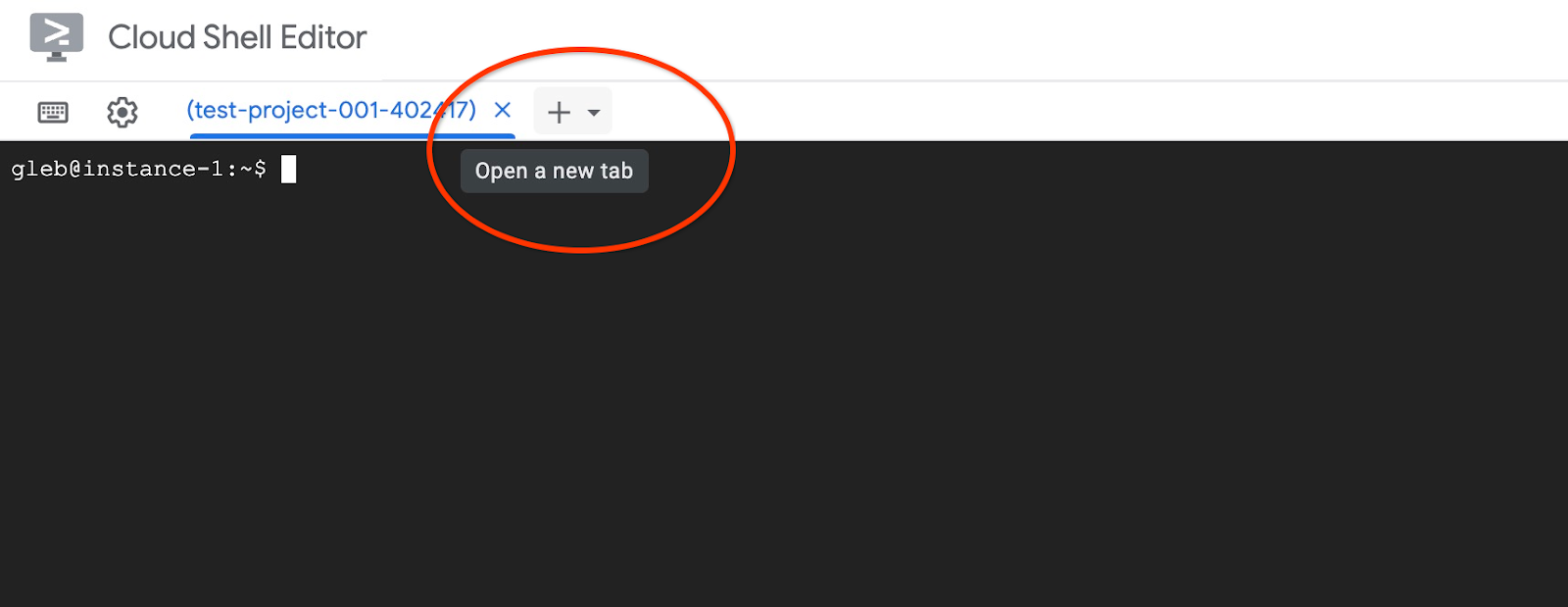

- Open another Cloud Shell tab using the "+" sign at the top.

- In the new Cloud Shell tab, execute:

export PROJECT_ID=$(gcloud config get-value project)

gcloud iam service-accounts create retrieval-identity

gcloud projects add-iam-policy-binding $PROJECT_ID \
--member="serviceAccount:retrieval-identity@$PROJECT_ID.iam.gserviceaccount.com" \
--role="roles/aiplatform.user"

- Expected console output:

student@cloudshell:~ (gleb-test-short-003)$ gcloud iam service-accounts create retrieval-identity

Created service account [retrieval-identity].

- Close the tab by running the exit command in it:

exit
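The --member value used above follows the pattern serviceAccount:ACCOUNT_ID@PROJECT_ID.iam.gserviceaccount.com. The helpers below (hypothetical names) just spell out that string. The account ID check encodes the IAM naming rule as commonly documented (6 to 30 characters, lowercase letters, digits and hyphens, starting with a letter and not ending with a hyphen); treat it as an assumption and check it against the IAM documentation.

```python
import re

# Assumed IAM account ID rule: starts with a lowercase letter, then lowercase
# letters, digits or hyphens, and ends with a letter or digit.
_ACCOUNT_ID = re.compile(r"[a-z]([-a-z0-9]*[a-z0-9])")


def is_valid_account_id(account_id: str) -> bool:
    """Check length (6-30) and allowed characters of a service account ID."""
    return 6 <= len(account_id) <= 30 and _ACCOUNT_ID.fullmatch(account_id) is not None


def service_account_email(account_id: str, project_id: str) -> str:
    """Email of a user-managed service account in the given project."""
    return f"{account_id}@{project_id}.iam.gserviceaccount.com"


def iam_member(account_id: str, project_id: str) -> str:
    """Value for --member in gcloud projects add-iam-policy-binding."""
    return f"serviceAccount:{service_account_email(account_id, project_id)}"
```

For this lab, iam_member("retrieval-identity", PROJECT_ID) yields the same string the shell command builds from $PROJECT_ID.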

Task 6. Deploy the extension service

Return to the first tab, where you are connected to the VM through SSH, and deploy the service.

- In the VM SSH session execute:

cd ~/genai-databases-retrieval-app

gcloud alpha run deploy retrieval-service \
--source=./retrieval_service/ \
--no-allow-unauthenticated \
--service-account retrieval-identity \
--region us-central1 \
--network=default \
--quiet

- Expected console output:

student@instance-1:~/genai-databases-retrieval-app$ gcloud alpha run deploy retrieval-service \

--source=./retrieval_service/\

--no-allow-unauthenticated \

--service-account retrieval-identity \

--region us-central1 \

--network=default

This command is equivalent to running `gcloud builds submit --tag [IMAGE] ./retrieval_service/` and `gcloud run deploy retrieval-service --image [IMAGE]`

Building using Dockerfile and deploying container to Cloud Run service [retrieval-service] in project [gleb-test-short-003] region [us-central1]

X Building and deploying... Done.

✓ Uploading sources...

✓ Building Container... Logs are available at [https://console.cloud.google.com/cloud-build/builds/6ebe74bf-3039-4221-b2e9-7ca8fa8dad8e?project=1012713954588].

✓ Creating Revision...

✓ Routing traffic...

Setting IAM Policy...

Completed with warnings:

Setting IAM policy failed, try "gcloud beta run services remove-iam-policy-binding --region=us-central1 --member=allUsers --role=roles/run.invoker retrieval-service"

Service [retrieval-service] revision [retrieval-service-00002-4pl] has been deployed and is serving 100 percent of traffic.

Service URL: https://retrieval-service-onme64eorq-uc.a.run.app

student@instance-1:~/genai-databases-retrieval-app$
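Each line of the deploy command above ends with a backslash, so a missing space before a backslash, or a blank line between continuation lines, silently changes or truncates the command. One way to sidestep that is to keep the arguments as a list. The sketch below (a hypothetical script; flag values copied from the lab's command) prints the equivalent one-line command and only runs it when called with --run.

```python
import shlex
import subprocess
import sys


def deploy_command(service: str = "retrieval-service",
                   source: str = "./retrieval_service/",
                   service_account: str = "retrieval-identity",
                   region: str = "us-central1",
                   network: str = "default") -> list[str]:
    """Return the lab's deploy command as an argv list, one flag per element."""
    return [
        "gcloud", "alpha", "run", "deploy", service,
        f"--source={source}",
        "--no-allow-unauthenticated",
        f"--service-account={service_account}",
        f"--region={region}",
        f"--network={network}",
        "--quiet",
    ]


if __name__ == "__main__":
    argv = deploy_command()
    # Print the equivalent single-line shell command (safe to copy and paste).
    print(shlex.join(argv))
    # Only deploy when explicitly asked, e.g. `python deploy.py --run`
    # from ~/genai-databases-retrieval-app.
    if "--run" in sys.argv[1:]:
        subprocess.run(argv, check=True)
```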

Verify the Service

Now we can check if the service runs correctly and the VM has access to the endpoint.

- In the VM SSH session execute:

curl -H "Authorization: Bearer $(gcloud auth print-identity-token)" $(gcloud run services list --filter="(retrieval-service)" --format="value(URL)")

- Expected console output:

student@instance-1:~/genai-databases-retrieval-app$ curl -H "Authorization: Bearer $(gcloud auth print-identity-token)" $(gcloud run services list --filter="(retrieval-service)" --format="value(URL)")

{"message":"Hello World"}

student@instance-1:~/genai-databases-retrieval-app$

If we see the "Hello World" message, our service is up and serving requests.
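The curl call passes an identity token in an Authorization: Bearer header because the service was deployed with --no-allow-unauthenticated. The same check could be sketched in Python with only the standard library, shelling out to the two gcloud commands the lab already uses; the function names are hypothetical.

```python
import json
import subprocess
import urllib.request


def gcloud_value(*args: str) -> str:
    """Run a gcloud command and return its stripped standard output."""
    result = subprocess.run(["gcloud", *args], capture_output=True, text=True, check=True)
    return result.stdout.strip()


def build_request(url: str, token: str) -> urllib.request.Request:
    """GET request carrying the identity token as a Bearer credential."""
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def is_hello_world(body: bytes) -> bool:
    """True if the body is JSON of the form {"message": "Hello World"}."""
    try:
        return json.loads(body).get("message") == "Hello World"
    except (ValueError, AttributeError):
        return False


if __name__ == "__main__":
    url = gcloud_value("run", "services", "list",
                       "--filter=(retrieval-service)", "--format=value(URL)")
    token = gcloud_value("auth", "print-identity-token")
    with urllib.request.urlopen(build_request(url, token), timeout=30) as resp:
        body = resp.read()
    print("service is up" if is_hello_world(body) else f"unexpected response: {body!r}")
```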

Task 7. Deploy Sample Application

Now that the extension service is up and running, we can deploy a sample application that uses it. The application can be deployed on the VM or on any other platform, such as Cloud Run, Kubernetes, or even a local laptop. Here we show how to deploy it on the VM.

Prepare the environment

We continue working on the VM. First, install the Python modules the application needs.

- In the VM SSH session execute:

cd ~/genai-databases-retrieval-app/llm_demo

pip install -r requirements.txt

- Expected output (redacted):

student@instance-1:~$ cd ~/genai-databases-retrieval-app/llm_demo

pip install -r requirements.txt

Collecting fastapi==0.104.0 (from -r requirements.txt (line 1))

Obtaining dependency information for fastapi==0.104.0 from https://files.pythonhosted.org/packages/db/30/b8d323119c37e15b7fa639e65e0eb7d81eb675ba166ac83e695aad3bd321/fastapi-0.104.0-py3-none-any.whl.metadata

Downloading fastapi-0.104.0-py3-none-any.whl.metadata (24 kB)

...

Run Assistant Application

Now we can start our application.

- In the VM SSH session execute:

export BASE_URL=$(gcloud run services list --filter="(retrieval-service)" --format="value(URL)")

export ORCHESTRATION_TYPE=langchain-tools

python run_app.py

- Expected output (redacted):

student@instance-1:~/genai-databases-retrieval-app/llm_demo$ export BASE_URL=$(gcloud run services list --filter="(retrieval-service)" --format="value(URL)")

student@instance-1:~/genai-databases-retrieval-app/llm_demo$ export ORCHESTRATION_TYPE=langchain-tools

student@instance-1:~/genai-databases-retrieval-app/llm_demo$ python run_app.py

INFO: Started server process [28565]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8081 (Press CTRL+C to quit)

Connect to the Application

You have several ways to connect to the application running on the VM. For example, you can open port 8081 on the VM with VPC firewall rules, or create a load balancer with a public IP. Here we use an SSH tunnel to the VM that forwards local port 8080 to port 8081 on the VM.

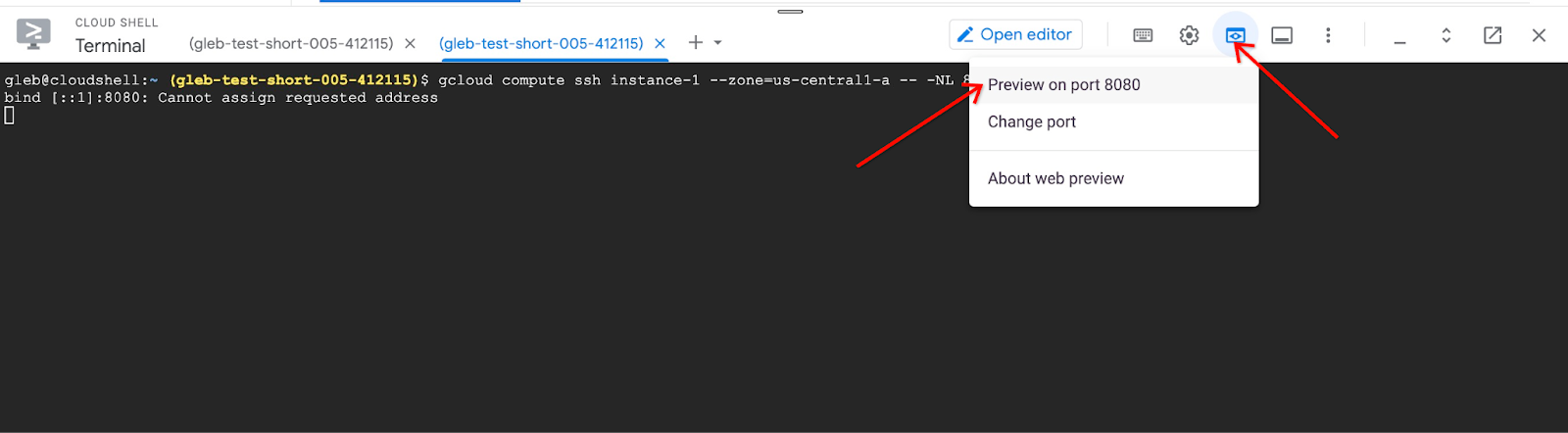

- Open another Cloud Shell tab using the sign "+" at the top.

- In the new Cloud Shell tab, start the tunnel to your VM by running this gcloud command:

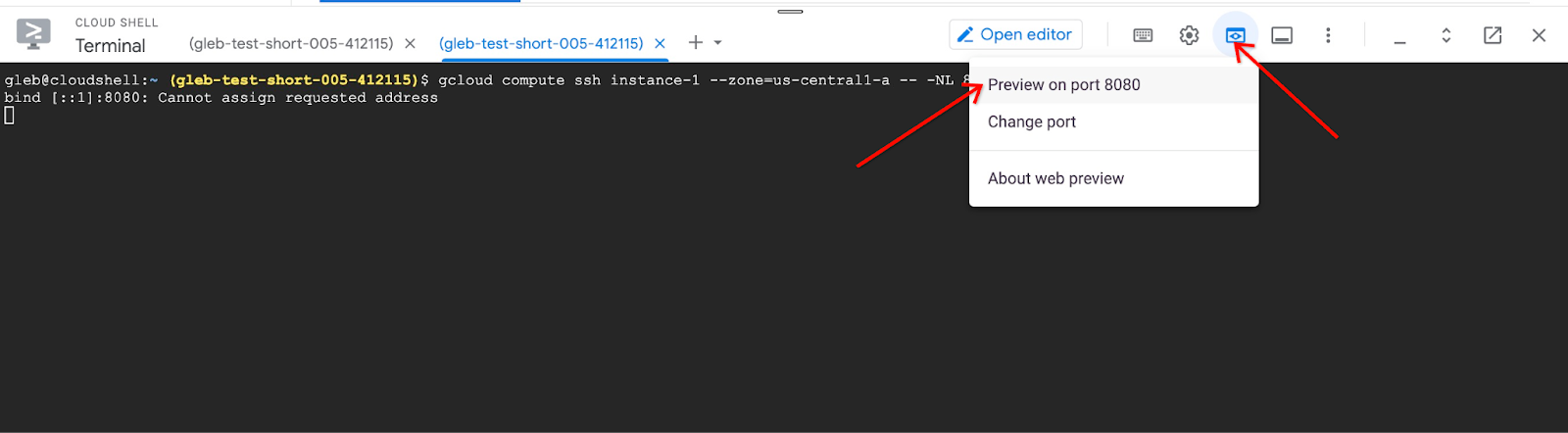

gcloud compute ssh instance-1 --zone={{{project_0.default_zone | ZONE }}} -- -NL 8080:localhost:8081

- The command may print the error "Cannot assign requested address"; you can ignore it.

Here is the expected output:

student@cloudshell:~ gcloud compute ssh instance-1 --zone=us-central1-a -- -NL 8080:localhost:8081

bind [::1]:8080: Cannot assign requested address

It opens port 8080 in your Cloud Shell, which can be used for Web Preview.
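Before clicking Web Preview, you can check that the tunnel's local end is accepting connections. The sketch below (hypothetical function name) tries a TCP connection to 127.0.0.1:8080 from the Cloud Shell tab. Using the IPv4 loopback address is deliberate, on the assumption that the bind error above only affects the IPv6 address [::1].

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # 8080 is the local end of the tunnel started with -NL 8080:localhost:8081.
    if is_port_open("127.0.0.1", 8080):
        print("Tunnel is listening on 127.0.0.1:8080; Web Preview should work.")
    else:
        print("Nothing is listening on 127.0.0.1:8080; is the gcloud compute ssh tunnel running?")
```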

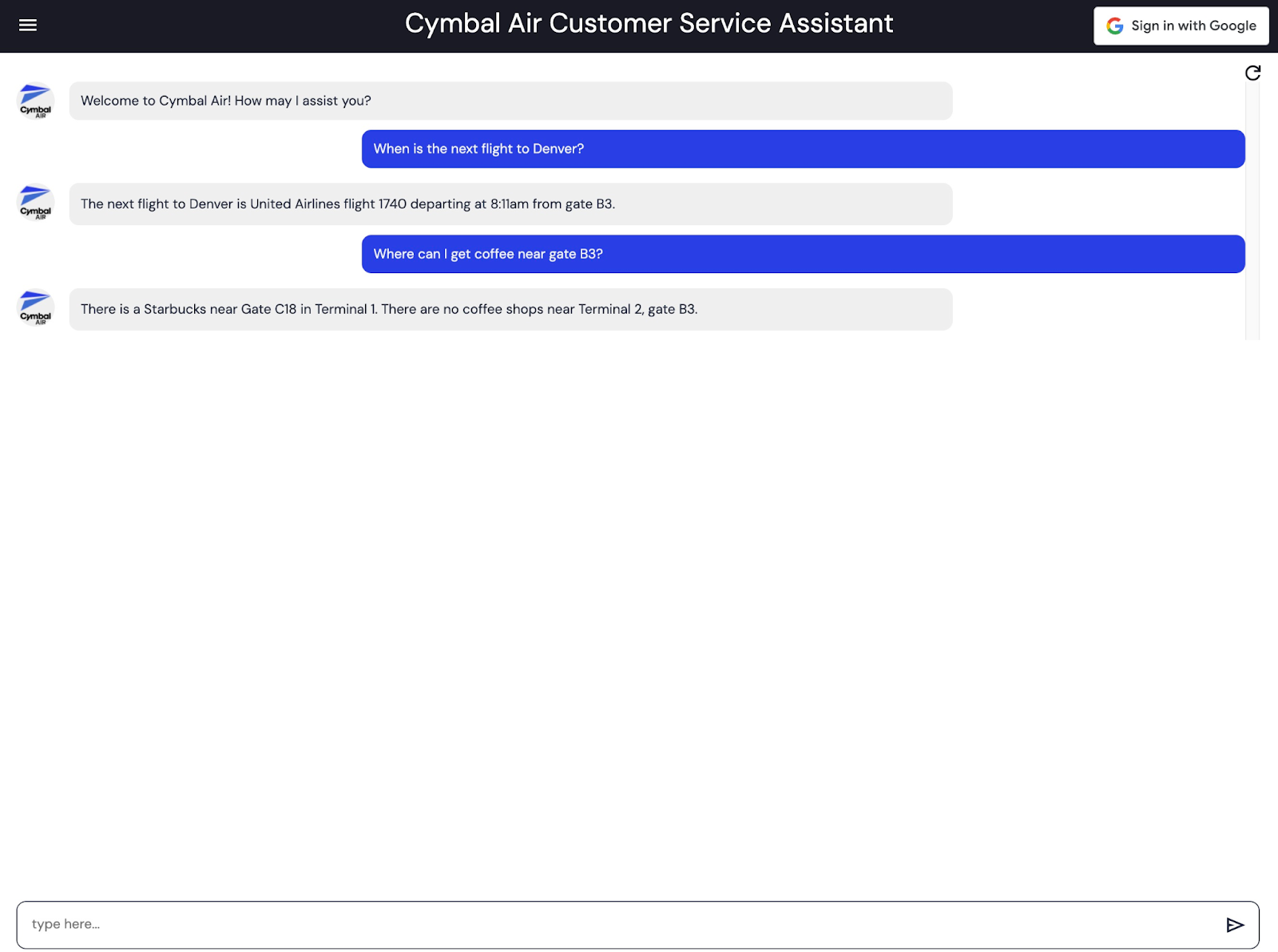

- Click the "Web preview" button at the top right of your Cloud Shell and choose "Preview on port 8080" from the drop-down menu.

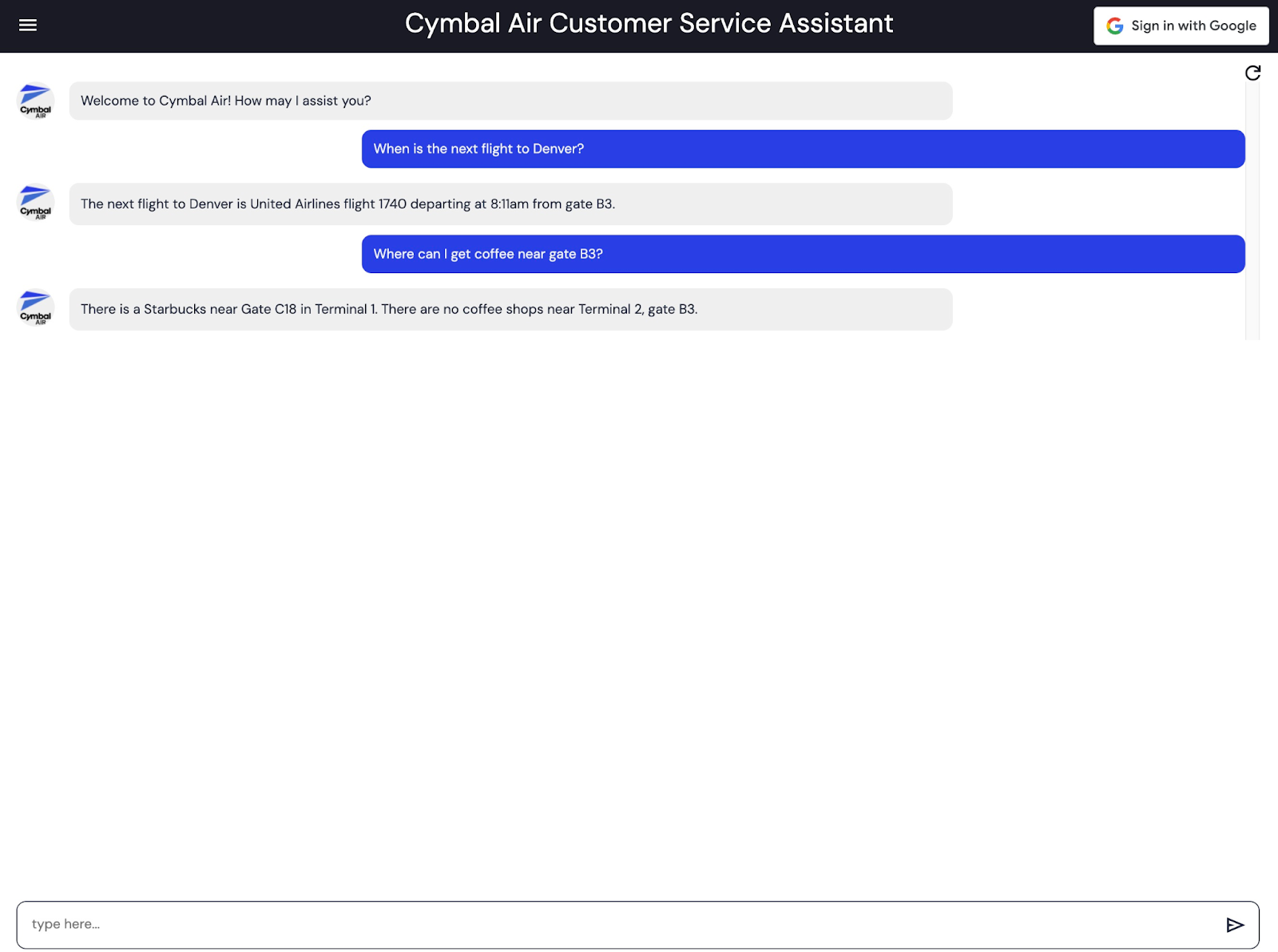

It opens a new tab in your web browser with the application interface. You should be able to see the "Cymbal Air Customer Service Assistant" page and you can post your question to the assistant at the bottom of the page.

This demo showcases the Cymbal Air customer service assistant. Cymbal Air is a fictional passenger airline. The assistant is an AI chatbot that helps travellers manage flights and look up information about Cymbal Air's hub at San Francisco International Airport (SFO).

It can help answer users' questions like:

- When is the next flight to Denver?

- Are there any luxury shops around gate D50?

- Where can I get coffee near gate A6?

- Where can I buy a gift?

The application uses the latest Google foundation models to generate responses and augments them with information about flights and amenities from the operational AlloyDB database. You can read more about this demo application on the GitHub page of the project.

Congratulations

Congratulations on completing the lab!

In this lab you connected to an AlloyDB database and deployed the GenAI Databases Retrieval Service and a sample application that uses it.

What we've covered

- How to deploy an AlloyDB cluster

- How to connect to AlloyDB

- How to configure and deploy GenAI Databases Retrieval Service

- How to deploy a sample application using the deployed service

Manual Last Updated Mar 11, 2024

Lab Last Tested Mar 11, 2024

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.
